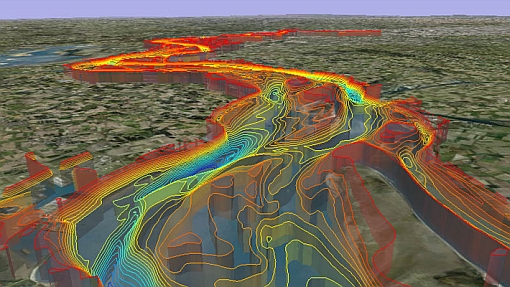

http://public.tableausoftware.com/views/StateCountyZip/PopulationByZip?:embed=y#2

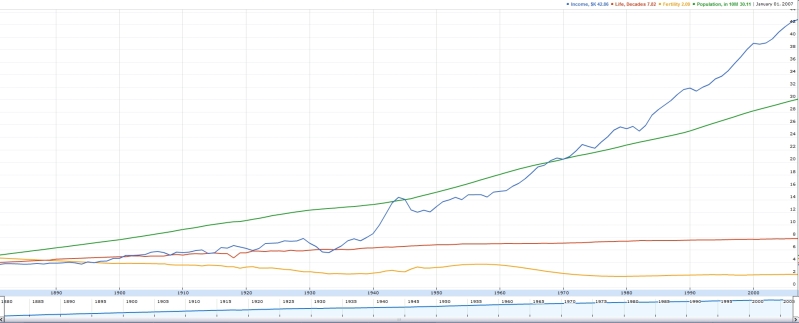

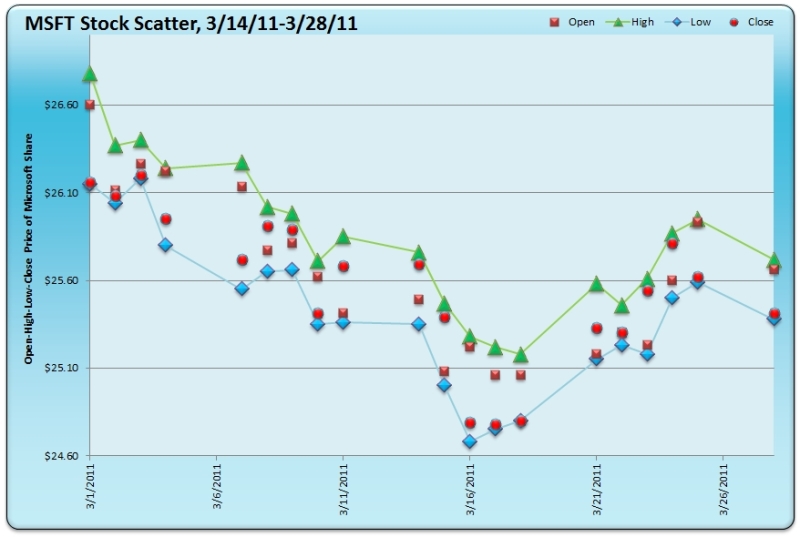

Inequality in USA, 1990-2011

Free rows from Market Capitalization DV Leader

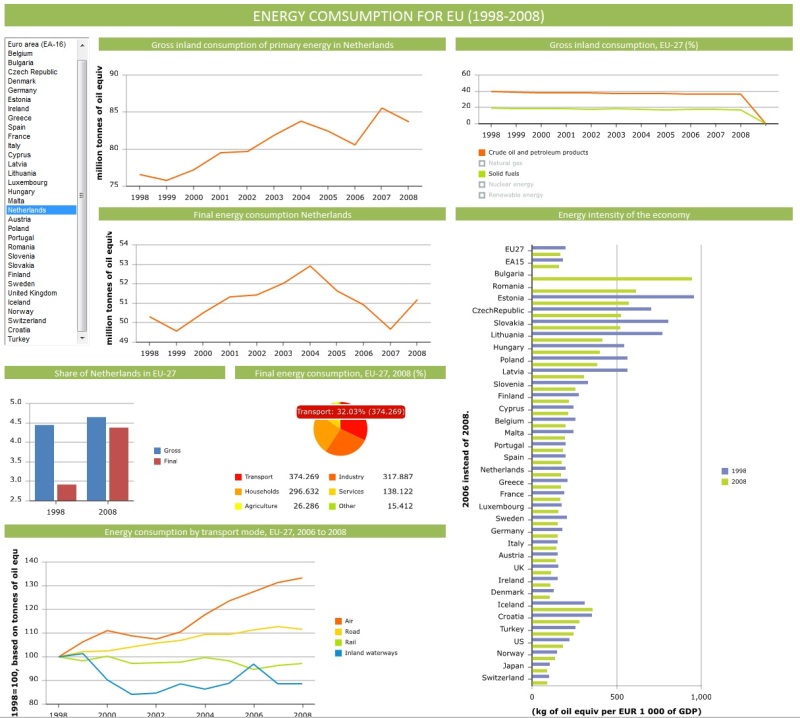

Last week Tableau increased the capacity of Data Visualizations published with Tableau Public 10-fold, to a cool 1 million rows of data. That is basically the same number of rows that Excel 2007, 2010 and 2013 (often used as data sources for Tableau Public) can handle these days. Tableau also increased the storage capacity of each free Tableau Public account 20-fold, to 1GB of free storage; see it here:

http://www.tableausoftware.com/public/blog/2013/08/one-million-rows-2072

It means that a free Tableau Public account will have twice the storage of Spotfire Silver’s most expensive Analyst account (that one will cost you $4500/year). Tableau said: “Consider it a gift from us to you.” I have to admit that even kids in this country know that nothing is free here, so please kid me not: we are all witnessing some kind of investment here, and this type of investment worked brilliantly in the past… And all users of Tableau Public are investing too, with their time and learning efforts.

And this is not all: “For customers of Tableau Public Premium, which allows users to save locally and disable download of their workbooks, the limits have been increased to 10 million rows of data at 10GB of storage space”, and this comes without changing the price of the service (of course, the Tableau Public Premium price is not fixed and depends on the number of impressions); see it here:

http://www.tableausoftware.com/about/press-releases/2013/tableau-software-extends-tableau-public-1-million-rows-data

Out of 100+ million Tableau users, only 40000 qualified to be called Tableau Authors (see it here http://www.tableausoftware.com/about/press-releases/2013/tableau-software-launches-tableau-public-author-profiles ), so they are consuming Tableau Public’s storage more actively than others. As an example, you can see my Tableau Author Profile here: http://public.tableausoftware.com/profile/andrei5435#/ .

I will assume those Authors will consume 40000GB of online storage, which will cost Tableau Software less than (my guess, I am open to correction from blog visitors) $20K/year just for the storage part of the Tableau Public service.
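As a back-of-envelope check of that guess, the figures above imply a price ceiling per gigabyte. The sketch below only restates the assumptions already made in the text (40000 Authors, 1GB each, a $20K/year upper bound); the variable names are illustrative.

```python
# Back-of-envelope check using only the figures assumed in the text.
authors = 40_000            # Tableau Authors
gb_per_author = 1           # free storage per Tableau Public account, in GB
budget_per_year = 20_000    # guessed upper bound on yearly storage cost, in USD

total_gb = authors * gb_per_author
implied_price_per_gb_year = budget_per_year / total_gb

print(f"Total storage: {total_gb} GB")
print(f"Implied price ceiling: ${implied_price_per_gb_year:.2f} per GB per year")
```

If real storage prices are below that ceiling, the $20K/year guess holds; if they are above it, the guess is too low.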

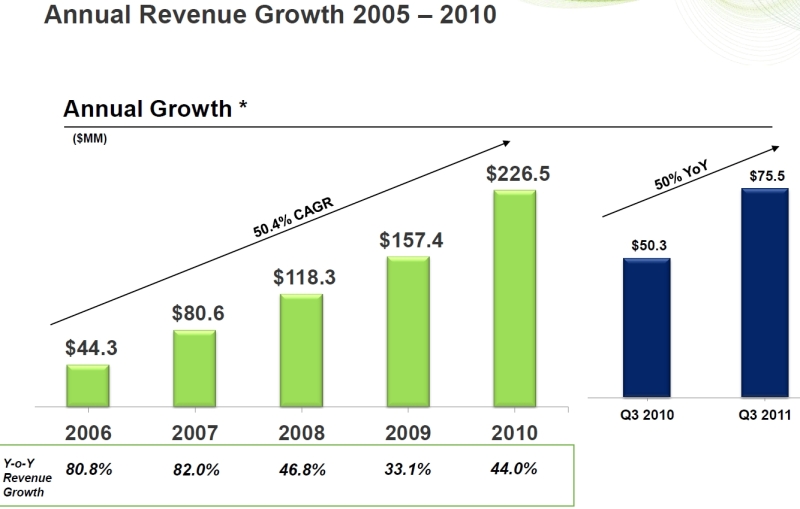

During the last week the other important announcement, the quarterly revenue, came from Tableau on 8/8/13: it reported Q2 revenue of $49.9 million, up 71% year-over-year: http://investors.tableausoftware.com/investor-news/investor-news-details/2013/Tableau-Announces-Second-Quarter-2013-Financial-Results/default.aspx .

Please note that 71% is extremely good YoY growth compared with the entire anemic “BI industry”, but less than the 100% YoY at which Tableau grew in its private past.

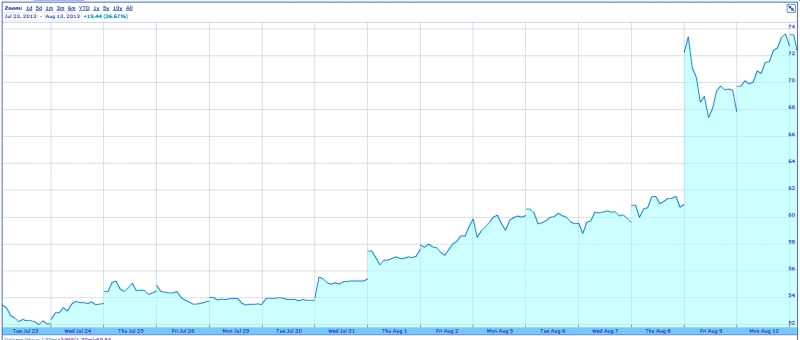

All the announcements above coincided with a sudden and somewhat magical increase in the nominal price of Tableau stock (traded under the DATA symbol on NYSE) from $56 (which is already high) on August 1st 2013 (the day of the announcement of 1 million rows/1GB storage for Tableau Public accounts) to $72+ today. I have no theory why this happened; one weak theory is investors’ madness and over-excitement about the Q2 revenue of $49.9M announced on 8/8/13:

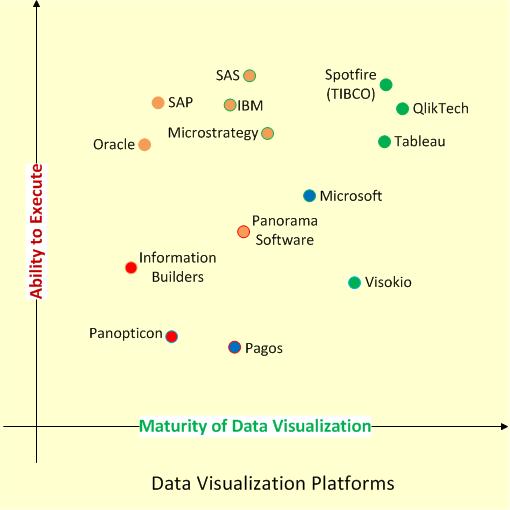

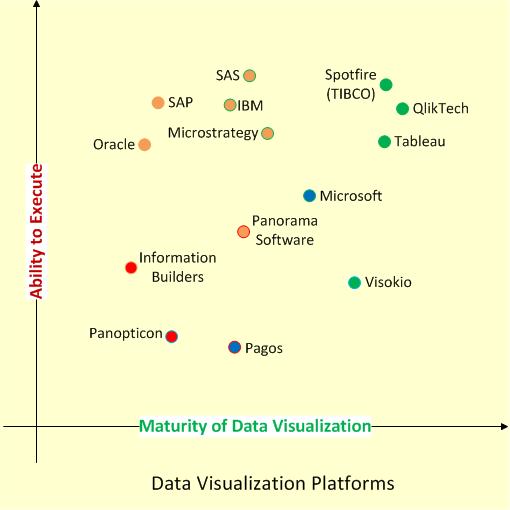

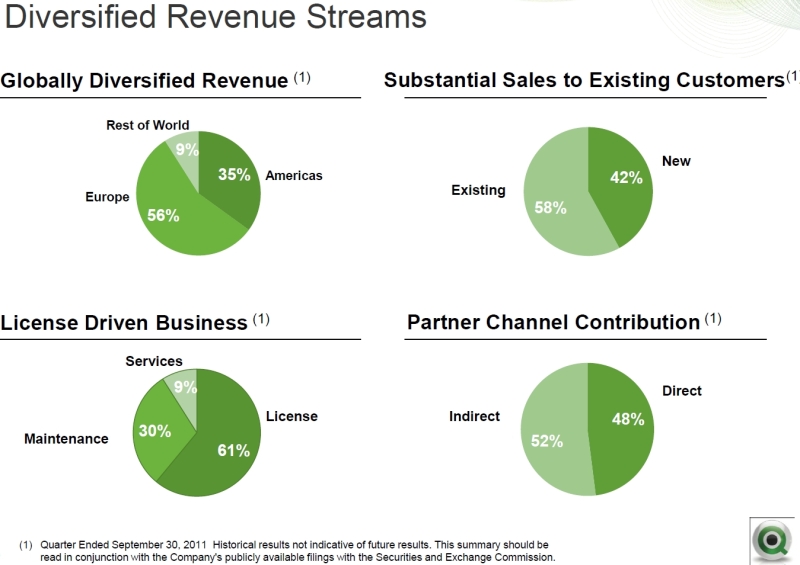

It means that the Market Capitalization of Tableau Software may be approaching $4B while its sales may be $200M/year. For comparison, Tableau’s direct and more mature competitor Qliktech now has a capitalization below $3B while its sales are approaching $500M/year. From the Market Capitalization point of view, in 3 months Tableau went from a private company to the largest publicly traded Data Visualization software company on the market!

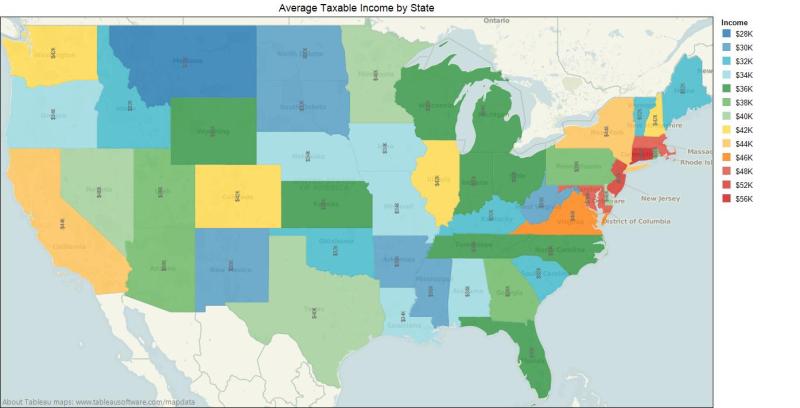

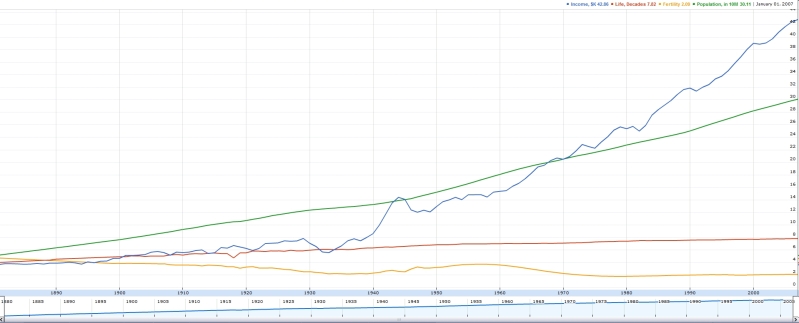

Inflation on USA for last 100 years

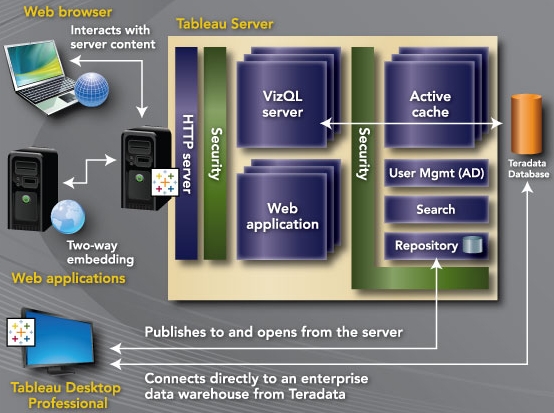

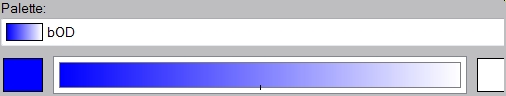

Tableau Server is in the Cloud

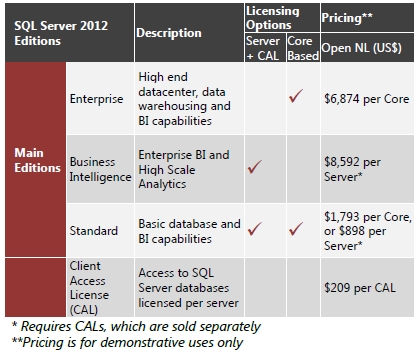

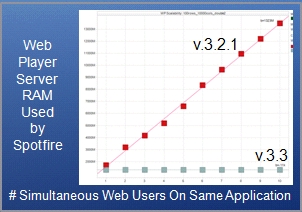

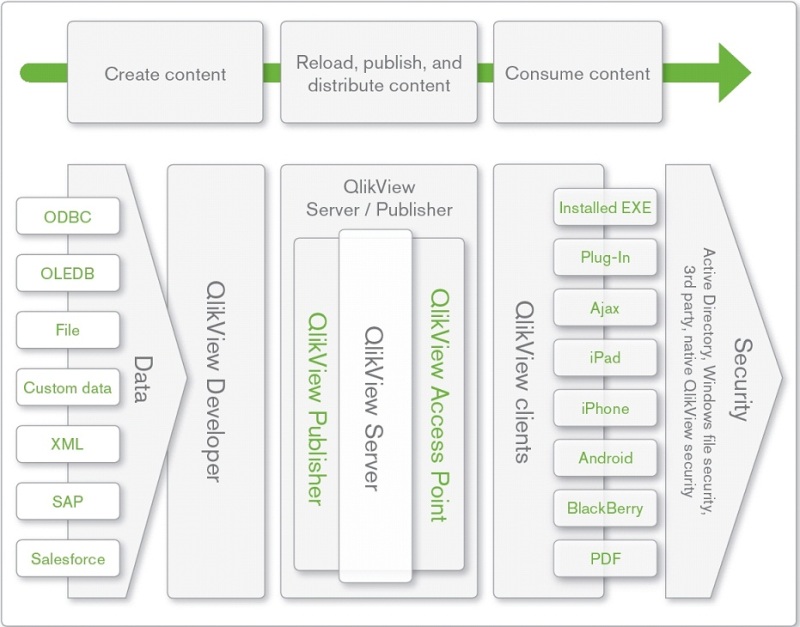

Competition in the Data Visualization market is not only on features, market share and mindshare but also on pricing and licensing. For example, Qlikview licensing and pricing has been public for a while here: http://www.qlikview.com/us/explore/pricing and Spotfire Silver pricing has been public for a while too: https://silverspotfire.tibco.com/us/silver-spotfire-version-comparison .

Tableau Desktop has 3 editions: Public (Free), Personal ($999) and Professional ($1999), see it here: http://www.tableausoftware.com/public/comparison ; in addition you can have the full Desktop (read-only) experience with the free Tableau Reader (neither Qlikview nor Spotfire has a free reader for server-less, unlimited distribution of visualizations, which makes Tableau a mind-share leader right away…)

The release of Tableau Server online hosting this month: http://www.tableausoftware.com/about/press-releases/2013/tableau-unveils-cloud-business-intelligence-product-tableau-online heated up the licensing competition and may force large changes in the licensing landscape for Data Visualization vendors. Tableau Server has existed in the cloud for a while with tremendous success as Tableau Public (free) and Tableau Public Premium (the former Tableau Digital with its weird pricing based on “impressions”).

But Tableau Online is much more disruptive for the BI market: for $500/year you can get a complete Tableau Server site (administered by you!) in the cloud with (initially) 25 users authenticated by you (the number can grow) and 100GB of cloud storage for your visualizations, which is 200 times more than you can get with the $4500/year top-of-the-line Spotfire Silver “Analyst account”. This Tableau Server site will be managed in the cloud by Tableau Software’s own experts and requires no IT personnel on your side! You may also compare it with http://www.rosslynanalytics.com/rapid-analytics-platform/applications/qlikview-ondemand .
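To make the storage comparison concrete, here is a small sketch of the cost per gigabyte implied by the numbers in this post. The Spotfire Silver storage figure is not quoted directly; it is derived from the "200 times more" ratio (100GB / 200 = 0.5GB), so treat it as an assumption.

```python
# Prices and storage as quoted in the post; the Spotfire storage size is
# derived from the "200 times" ratio rather than taken from a price list.
tableau_online = {"price_per_year": 500, "storage_gb": 100}
storage_ratio = 200
spotfire_silver_analyst = {
    "price_per_year": 4500,
    "storage_gb": tableau_online["storage_gb"] / storage_ratio,
}

def dollars_per_gb(plan):
    """Yearly price divided by included storage."""
    return plan["price_per_year"] / plan["storage_gb"]

print(f"Tableau Online:  ${dollars_per_gb(tableau_online):,.2f} per GB per year")
print(f"Spotfire Silver: ${dollars_per_gb(spotfire_silver_analyst):,.2f} per GB per year")
```

Storage is of course only one dimension of the comparison; the sketch ignores users, features and the cost of the desktop client.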

A solution hosted by Tableau Software is particularly useful when sharing dashboards with customers and partners because the solution is secure but outside a company’s firewall. In the case of Tableau Online, users can publish interactive dashboards to the web and share them with clients or partners without granting behind-the-firewall access.

Since Tableau 8 has a new Data Extract API, you can do all data refreshes behind your own firewall and republish your TDE files in the cloud anytime you need (even automatically, on demand or on schedule). Tableau Online has no minimum number of users and can scale as a company grows. At any point, a company can migrate to Tableau Server to manage it in-house. Here is an introductory video about Tableau Online: Get started with Tableau Online.

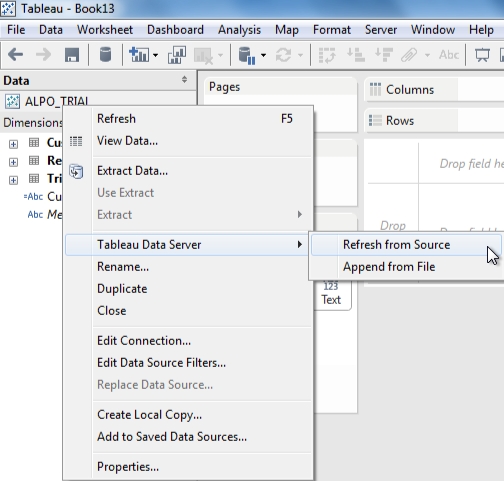

Tableau Server in the cloud provides at least 3 ways to update your data (for more details see here: http://www.tableausoftware.com/learn/whitepapers/tableau-online-understanding-data-updates ):

- republish the Data Source or Workbook;

- use command-line tools to schedule batch updates locally and automatically, see more details here: http://onlinehelp.tableausoftware.com/current/pro/online/en-us/help.html#extracting_TDE.html;

- refresh the Tableau Server Data Source using your Tableau Desktop as a proxy between your on-premise Data Source and Tableau Online.
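The second option (scheduled command-line updates) could be scripted roughly as follows. This is only a sketch: the `refreshextract` subcommand and its flags are written from memory of the Tableau command-line utility and should be checked against the help page linked above, and every value passed in (server URL, user, site, project, data source name) is a hypothetical placeholder.

```python
import shlex

def build_refresh_command(server, username, password, site, project, datasource):
    """Build the argument list for one extract refresh.

    The subcommand and flag names are assumptions to verify against the
    Tableau documentation before use.
    """
    return [
        "tableau", "refreshextract",
        "--server", server,
        "--username", username,
        "--password", password,
        "--site", site,
        "--project", project,
        "--datasource", datasource,
    ]

# All values below are hypothetical placeholders.
cmd = build_refresh_command(
    server="https://online.example.com",
    username="me@example.com",
    password="secret",
    site="mysite",
    project="Default",
    datasource="SalesExtract",
)

# Print the command so it can be pasted into a scheduled job;
# subprocess.run(cmd, check=True) would execute it directly instead.
print(" ".join(shlex.quote(part) for part in cmd))
```

The scheduling itself is left to whatever the local machine offers (for example Windows Task Scheduler), since the refresh runs behind your own firewall.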

Here is another, more lengthy intro into Tableau BI in Cloud:

Tableau as a Service is a step in the right direction, but be cautious: in practice, the architecture of the hosted version could impact performance. Plus, the nature of the product means that Tableau isn’t really able to offer features like pay-as-you-go that have made cloud-based software popular with workers. By their nature, data visualization products require access to data. Businesses that store their data internally must publish it to Tableau’s servers. That can be a problem for businesses that have large amounts of data or that are prevented from shifting their data off premises for legal or security reasons. It could also create a synchronization nightmare, as workers play with data hosted at Tableau that may not be as up-to-date as internally stored data. Depending on the location of the customer relative to Tableau’s data center, data access could be slow.

And finally, the online version requires the desktop client, which costs $2,000. Tableau may implement Tableau Desktop analytical features in a browser in the future while continuing to support the desktop and on-premise model to meet the security and regulatory requirements facing some customers.

3 oldest Charts: pie, line, bar

Drill-Down Demo, using Tableau Public

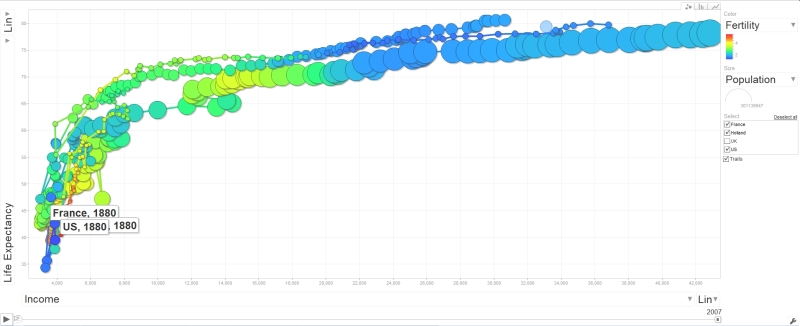

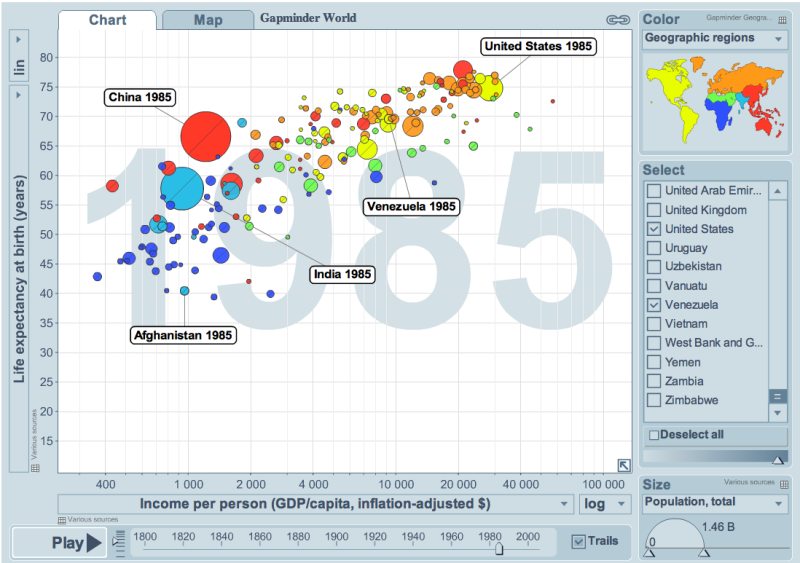

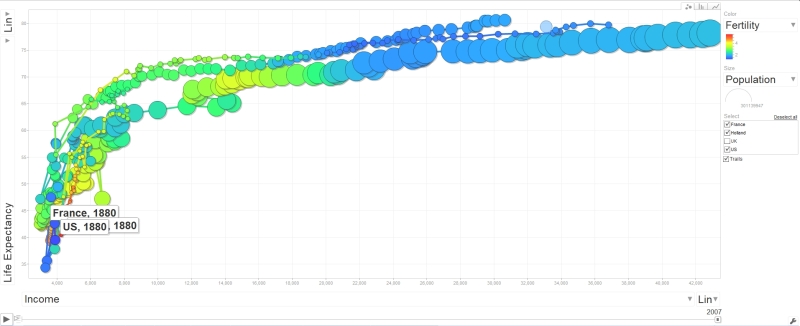

Motion Chart with Tableau

Motion Chart using Tableau: in the browser you need to use the vertical handle at the bottom right to see motion; to see automatic motion you need to download the free Tableau Reader, then download the workbook and open it with Tableau Reader:

http://public.tableausoftware.com/shared/NNP4TKRWB?:display_count=no

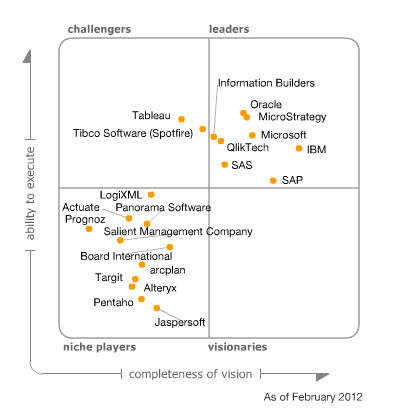

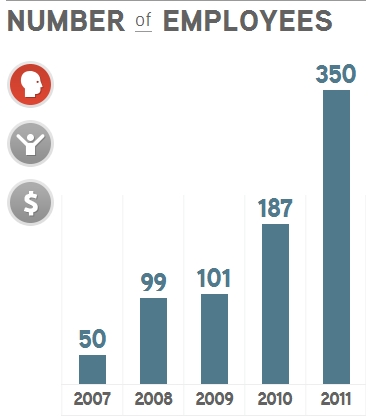

Time for Tableau is now: Part 2 – IPO

Tableau Software filed for an IPO on the New York Stock Exchange under the symbol “DATA”. In sharp contrast to other business-software makers that have gone public in the past year, Tableau is profitable, despite hiring a huge number of new employees. For the years ended December 31, 2010, 2011 and 2012, Tableau’s total revenues were $34.2 million, $62.4 million and $127.7 million, respectively. The number of full-time employees increased from 188 as of December 31, 2010 to 749 as of December 31, 2012.
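The growth rates implied by these revenue figures can be worked out with a few lines. This is a plain arithmetic sketch on the numbers quoted above; the function name is illustrative.

```python
# Revenue (in $ millions) and headcount as quoted from the IPO filing.
revenue_musd = {2010: 34.2, 2011: 62.4, 2012: 127.7}
employees = {2010: 188, 2012: 749}

def yoy_growth(previous, current):
    """Year-over-year growth in percent."""
    return (current - previous) / previous * 100

for year in (2011, 2012):
    growth = yoy_growth(revenue_musd[year - 1], revenue_musd[year])
    print(f"{year}: revenue up {growth:.1f}% year-over-year")

headcount_multiple = employees[2012] / employees[2010]
print(f"Headcount grew {headcount_multiple:.1f}x from 2010 to 2012")
```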

Tableau’s biggest shareholder is venture capital firm New Enterprise Associates, with a 38 percent stake. Founder Pat Hanrahan owns 18 percent, while co-founders Christopher Stolte and Christian Chabot, who is also chief executive officer, each own more than 15 percent. Meritech Capital Partners controls 6.4 percent. Tableau recognized three categories of Primary Competitors:

- large suppliers of traditional business intelligence products, like IBM, Microsoft, Oracle and SAP AG;

- spreadsheet software providers, such as Microsoft Corporation;

- business analytics software companies: Qlik Technologies Inc. and TIBCO Spotfire.

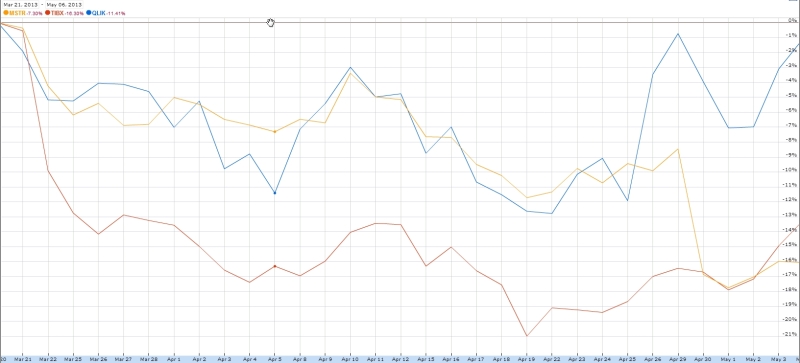

Update 4/29/13: This news may be related to the Tableau IPO: I understand that Microstrategy’s growth cannot be compared with the growth of Tableau or even Qliktech. But to go below the average “BI market” growth? Or even to a 6% or 24% decrease? What is going on here: “First quarter 2013 revenues were $130.2 million versus $138.3 million for the first quarter of 2012, a 6% decrease. Product licenses revenues for the first quarter of 2013 were $28.4 million versus $37.5 million for the first quarter of 2012, a 24% decrease.”

Update 5/6/13: Tableau Software Inc. will sell 5 million shares, while shareholders will sell 2.2 million shares, Tableau said in an amended filing with the U.S. Securities and Exchange Commission. The underwriters have the option to purchase up to an additional 1,080,000 shares. It means the total can be 8+ million shares for sale.

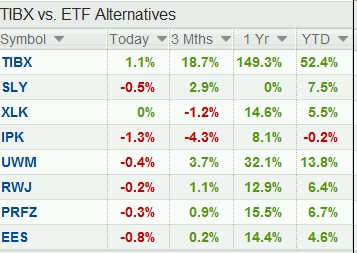

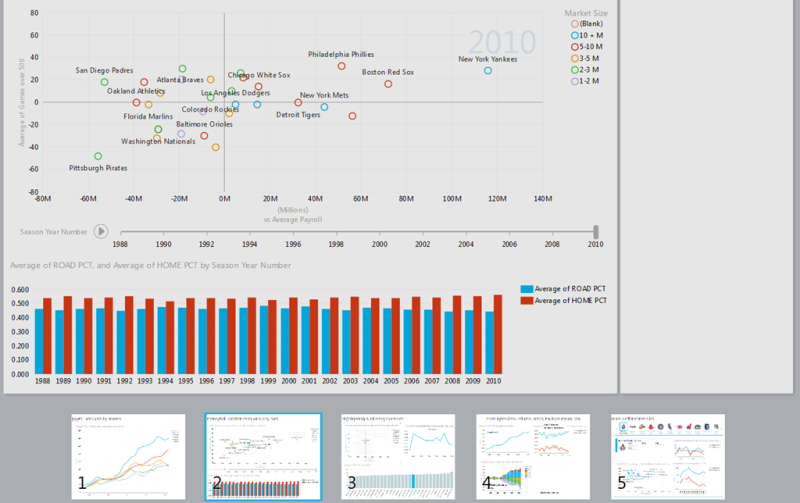

The company expects its initial public offering to raise up to $215.3 million at a price of $23 to $26 per share. If this happens, it will create a public company with a large capitalization, so Qliktech and Spotfire will have an additional problem to worry about. This is how QLIK (blue line), TIBX (red) and MSTR (orange line) stock behaved during the last 6 weeks after the release of Tableau 8 and the official Tableau IPO announcement:
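The "up to $215.3 million" figure can be reproduced from the share counts in the 5/6/13 update and the top of the price range. The sketch below is just that arithmetic; it assumes the underwriters' option is fully exercised.

```python
# Share counts from the amended filing, price range from the text.
company_shares = 5_000_000
shareholder_shares = 2_200_000
underwriter_option = 1_080_000     # assumed to be fully exercised
price_low, price_high = 23, 26     # USD per share

total_shares = company_shares + shareholder_shares + underwriter_option
min_proceeds = total_shares * price_low
max_proceeds = total_shares * price_high

print(f"Total shares offered: {total_shares:,}")
print(f"Proceeds range: ${min_proceeds / 1e6:.1f}M to ${max_proceeds / 1e6:.1f}M")
```

Note that this total covers the whole offering, including the shares sold by existing shareholders, not only the money that goes to the company.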

Update 5/16/13: According to this article at Seeking Alpha (also see the S-1 Form), Tableau Software Inc. (symbol “DATA”) has scheduled a $176 million IPO with a market capitalization of $1.4 billion for Friday, May 17, 2013. Tableau’s March quarter sales were up 60% from the March ’12 quarter. Qliktech’s sales were up only 23% on a similar comparative basis.

According to another article, Tableau raised its IPO price and it may reach a capitalization of $2B by the end of Friday, 5/17/13. That is almost comparable with the capitalization of Qliktech…

Update 5/17/13: Tableau’s IPO offer price was $31 per share, but it started today with a price of $47 and finished the day at $50.75 (raising $400M in one day) with an estimated Market Cap around $3B (or more?). It is hard to understand the market: Tableau stock (symbol: DATA) finished its first day above $50 with a Market Capitalization higher than QLIK, which today has Cap = $2.7B, but Qliktech has almost 3 times more sales than Tableau!

For comparison MSTR today has Cap = $1.08B and TIBX today has Cap = $3.59B. While I like Tableau, today proved that most investors are crazy, if you compare numbers in this simple table:

| Symbol | Market Cap, $B, as of 5/17/13 | Revenue, $M, as of 3/31/13 (trailing 12 months) | FTE (Full Time Employees) |
| --- | --- | --- | --- |
| TIBX | 3.59 | 1040 | 3646 |
| MSTR | 1.08 | 586 | 3172 |
| QLIK | 2.67 | 406 | 1425 |
| DATA | between $2B and $3B? | 143 | 834 |
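One way to read this table is to divide market capitalization by trailing revenue (a price-to-sales ratio). The sketch below does that with the table's own numbers; for DATA it uses both ends of the "$2B to $3B" guess, so that row is a range rather than a single value.

```python
# (market cap in $B, trailing-12-month revenue in $M) from the table above.
# DATA has two entries because its cap is only given as a range.
companies = {
    "TIBX": (3.59, 1040),
    "MSTR": (1.08, 586),
    "QLIK": (2.67, 406),
    "DATA (low)": (2.0, 143),
    "DATA (high)": (3.0, 143),
}

def price_to_sales(cap_billion, revenue_million):
    """Market cap divided by revenue, both converted to $ millions."""
    return cap_billion * 1000 / revenue_million

for symbol, (cap, revenue) in companies.items():
    print(f"{symbol:12} {price_to_sales(cap, revenue):5.1f}x revenue")
```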

See the interview with Tableau Software co-founder Christian Chabot: he discusses the company’s IPO with Emily Chang on Bloomberg Television’s “Bloomberg West.” However, it makes me sad when Tableau’s CEO implies that Tableau is ready for big data, which is not true.

Here are some pictures of the Tableau team at the NYSE: http://www.tableausoftware.com/ipo-photos and here is the announcement about “closing IPO”.


Showing 1000 marks versus 42000 marks in Tableau 8

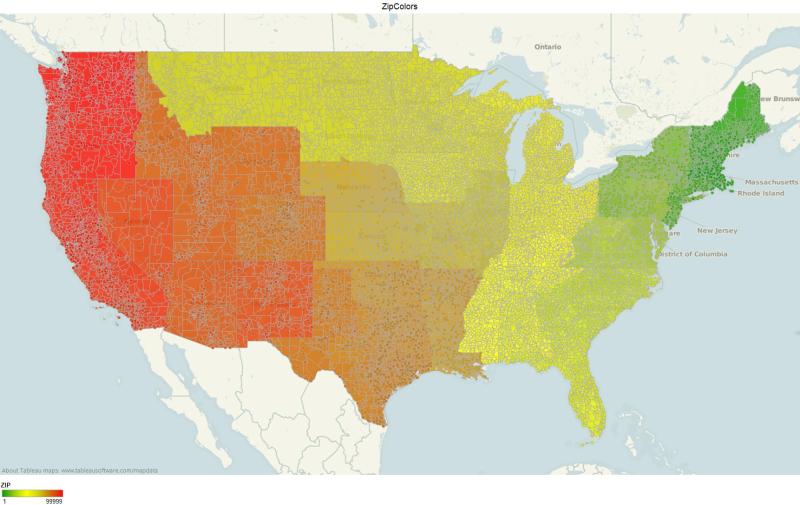

This DataView with all USA Counties can take advantage of Local Rendering (if you have a modern Web Browser), because Tableau 8 will switch to it if a DataView has fewer than 5000 marks (datapoints):

http://public.tableausoftware.com/views/Zips_0/CountyColors?:embed=y&:display_count=yes

But this DataView with all USA ZIP code areas will be rendered by Tableau Server, because Tableau 8 will use server-side rendering if a DataView has more than 5000 marks (datapoints):

http://public.tableausoftware.com/views/Zips_0/ZipColors?:embed=y&:display_count=yes

For an explanation please read this:

http://onlinehelp.tableausoftware.com/current/server/en-us/browser_rendering.htm

Time for Tableau is now…

Today Tableau 8 was released with 90+ new features (actually it may be more than 130) after an exhausting 6+ months of Alpha and Beta testing with 3900+ customers as Beta testers! I personally expected it 2 months ago, but I would rather have it with fewer bugs, and this is why I have no problem with the delay. During this “delay” Tableau Public achieved a phenomenal milestone: 100 million users…

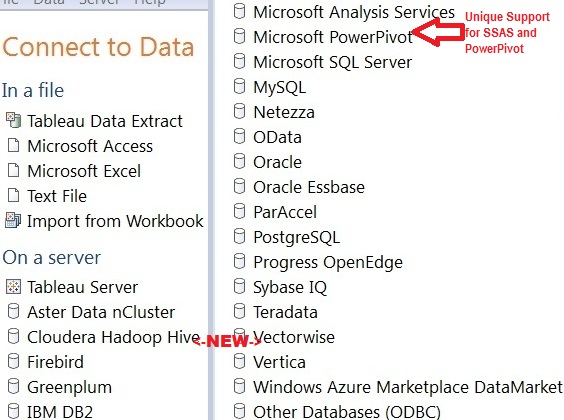

Tableau 8 introduced:

- web and mobile authoring,

- access to new data sources: Google Analytics, Salesforce.com, Cloudera Impala, DataStax Enterprise, Hadapt, Hortonworks Hadoop Hive, SAP HANA, and Amazon Redshift.

- New Data Extract API that allows programmers to load data from anywhere into Tableau; it also makes certain parts of Tableau licensing look ridiculous, because licenses (for example core licenses) should no longer be consumed by background tasks.

- New JavaScript API enables integration with business (and other web-) applications.

- Local Rendering: leveraging the graphics hardware acceleration available on ordinary computers. Tableau 8 Server dynamically determines where rendering will complete faster, on the server or in the browser, and acts accordingly. Also, Dashboards now render views in parallel when possible.

Tableau Software plans to add in next versions (after 8.0) some very interesting and competitive features, like:

- Direct query of large databases, quick and easy ETL and data integration.

- Tableau on a Mac and Tableau as a pure Cloud offering.

- Make statistical & analytical techniques accessible (I wonder if it means integration with R?).

- Tableau founder Pat Hanrahan recently talked about “Showing is Not Explaining”, so Tableau planned to add features that support storytelling by constructing visual narratives and effective communication of ideas.

I did not see on Tableau’s roadmap some long overdue features like a 64-bit implementation (currently all Tableau Server processes, except one, are 32-bit!), a Server implementation on Linux (we do not want to pay Windows 2012 Server CAL taxes to Bill Gates) and a direct mention of integration with R like Spotfire has. I hope those planning and strategic mistakes will not impact the upcoming IPO.

I personally think that Tableau has to stop its ridiculous practice of consuming 1 core license for each Backgrounder server process; since the Tableau Data Extract API is free, all Tableau Backgrounder processes should be free too and should be able to run on any hardware and even any OS.

Tableau 8 managed to get negative feedback from the famous Stephen Few, who questioned Tableau’s ability to stay on course. His unusually long blog post “Tableau Veers from the Path” attracted an enormous number of comments from all kinds of Tableau experts. I will be cynical here and note that there is no such thing as negative publicity, and more publicity is better for the upcoming Tableau IPO.

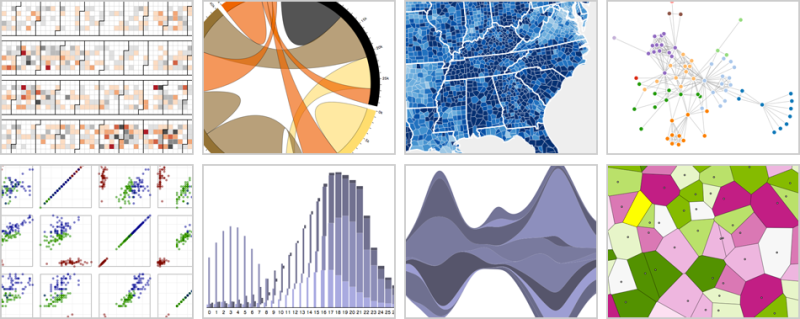

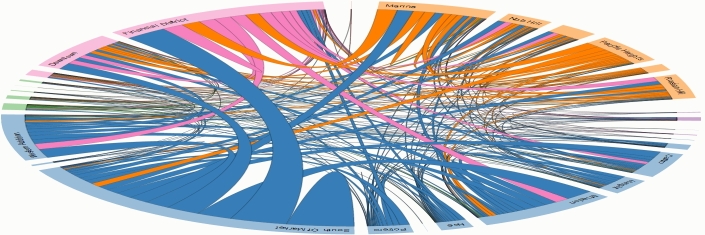

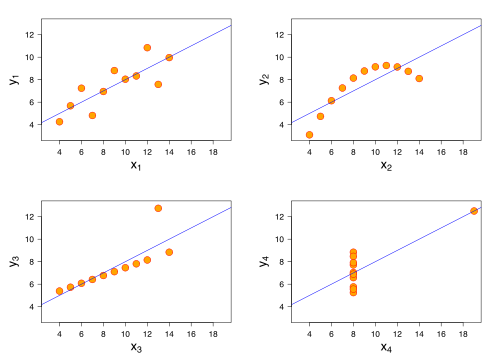

to DV or to D3 – that is the question

The most popular (among business users) approach to visualization is to use a Data Visualization (DV) tool like Tableau (or Qlikview or Spotfire), where a lot of features are already implemented for you. Recent proof of this amazing popularity is that at least 100 million people (as of February 2013) used Tableau Public as their Data Visualization tool of choice, see

http://www.tableausoftware.com/about/blog/2013/2/crossing-100-million-milestone-21304

However, to make your documents and stories (and not just your data visualization applications) driven by your data, you may need the other approach: to code the visualization of your data into your story, and visualization libraries like the popular D3 toolkit can help you. D3 stands for “Data-Driven Documents”. The author of D3, Mr. Mike Bostock, designs interactive graphics for the New York Times; one of the latest samples is here:

and NYT allows him to do a lot of Open Source work, which he demonstrates at his website here:

https://github.com/mbostock/d3/wiki/Gallery .

Mike was a “visualization scientist” and a computer science PhD student at Stanford University and a member of the famous group of people now called the “Stanford Visualization Group”:

http://vis.stanford.edu/people/

This Visualization Group was the birthplace of Tableau’s prototype; sometimes they called it “a Visual Interface” for exploring data, and the other name for it is Polaris:

http://www.graphics.stanford.edu/projects/polaris/

and we know that the creators of Polaris started Tableau Software. Another of the Group’s popular “products” was a graphical toolkit for visualization (mostly in JavaScript, as opposed to Polaris, written in C++), called Protovis:

http://mbostock.github.com/protovis/

and Mike Bostock was one of the main co-authors of Protovis. Less than 2 years ago the Visualization Group suddenly stopped developing Protovis and recommended that everybody switch to the D3 library authored by Mike. This library is Open Source (only 100KB in ZIP format) and can be downloaded from here:

In order to use D3, you need to be comfortable with HTML, CSS, SVG, JavaScript programming, the DOM (and other Web Standards); an understanding of the jQuery paradigm will be useful too. Basically, if you want to be at least partially as good as Mike Bostock, you need to have the mindset of a programmer (I guess in addition to a business user mindset), like this D3 expert:

Most successful early D3 adopters combine 3 or more mindsets: programmer, business analyst, data artist and sometimes even data storyteller. For your programmer’s mindset you may be interested to know that D3 has a large set of Plugins, see:

https://github.com/d3/d3-plugins

and a rich API, see https://github.com/mbostock/d3/wiki/API-Reference

You can find hundreds of D3 demos, samples, examples, tools, products and even a few companies using D3 here: https://github.com/mbostock/d3/wiki/Gallery
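D3 itself is a JavaScript library, so the following is not D3 code. It is a small Python sketch of the underlying idea of a data-driven document: each data value is bound to one SVG element whose attributes are computed from that value. The function name and layout constants are illustrative; the sample data is the trailing revenue from the table earlier in this blog.

```python
from xml.sax.saxutils import escape

def bar_chart_svg(data, bar_height=20, gap=5, max_width=300):
    """Return an SVG document with one <rect> and one <text> per data item."""
    largest = max(value for _, value in data)
    parts = []
    for index, (label, value) in enumerate(data):
        y = index * (bar_height + gap)
        width = max_width * value / largest      # bar length is driven by the data
        parts.append(
            f'<rect x="0" y="{y}" width="{width:.1f}" height="{bar_height}"/>'
        )
        parts.append(
            f'<text x="{width + 5:.1f}" y="{y + bar_height * 0.75:.1f}">'
            f"{escape(label)}: {value}</text>"
        )
    total_height = len(data) * (bar_height + gap)
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{max_width + 150}" height="{total_height}">'
        + "".join(parts)
        + "</svg>"
    )

# Trailing-12-month revenue in $M, as listed earlier in this blog.
revenue = [("TIBX", 1040), ("MSTR", 586), ("QLIK", 406), ("DATA", 143)]
svg = bar_chart_svg(revenue)
print(svg)
```

Saving the printed output as an `.svg` file and opening it in a browser shows the chart. D3 adds what this sketch lacks: binding to a live DOM, transitions, scales and interaction.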

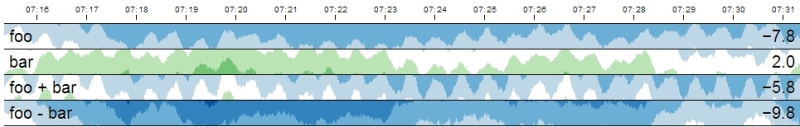

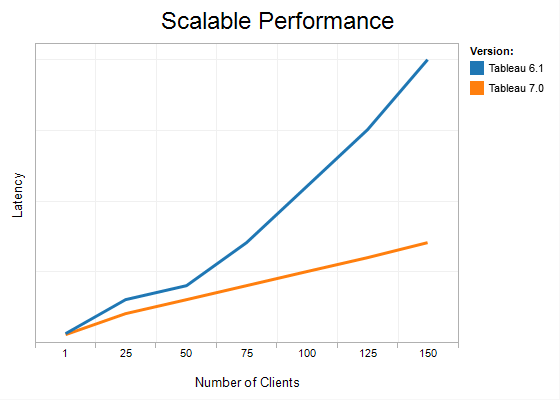

5000 Points: Local Rendering is here

The human eye cannot effectively process more than a few thousand datapoints per View.

Additionally, in Data Visualization you have other restrictions:

- the number of pixels on your screen (maybe 2-3 million maximum) available for your View (Chart or Dashboard).

- the time to render millions of Datapoints can be too long and may create a bad User Experience (too much waiting).

- the time to load your Datapoints into your View; if you wish to have a good User Experience, then 2-3 seconds is the maximum a user can wait. If you have a live connection to a datasource, then 2-3 seconds means a few thousand Datapoints maximum.

- again, the more Datapoints you put in your View, the more crowded it will be, and the less useful and less understandable your View will be for your users.
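The pixel restriction can be made concrete with a little arithmetic. The sketch below assumes a 1920x1080 screen fully occupied by one View (an assumption for illustration, in line with the "2-3 million pixels" estimate above) and shows how much screen area each Datapoint gets as the count grows.

```python
# Assumed screen size; the whole screen is treated as one View.
SCREEN_WIDTH, SCREEN_HEIGHT = 1920, 1080
total_pixels = SCREEN_WIDTH * SCREEN_HEIGHT

def pixels_per_mark(mark_count, pixels=total_pixels):
    """Average number of pixels available to each Datapoint."""
    return pixels / mark_count

for marks in (1_000, 5_000, 42_000, 1_000_000):
    share = pixels_per_mark(marks)
    side = share ** 0.5   # side of an equivalent square, in pixels
    print(f"{marks:>9,} marks: {share:10.1f} px each (about {side:.1f} x {side:.1f})")
```

At a million Datapoints each one gets roughly two pixels, which is why such Views become crowded and hard to read.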

Recently, some vendors started to add a new reason (called Local Rendering) for you to restrict how many Datapoints you put into your DataView: the use of client-side hardware (especially its graphics hardware) for rendering.

Local rendering means that the Data Visualization Server will send DataPoints instead of Images to the Client, and rendering of the Image will happen on the Client side, using the capability of modern Web Browsers (to use the Client’s hardware) and HTML5 Canvas technology.

For example, the new feature in Tableau Server 8 will automatically switch to Local Rendering if the number of DataPoints in your DataView (Worksheet with your Chart or Dashboard) is less than 5000 DataPoints (Marks in Tableau speak). In addition to faster rendering, it means fewer round-trips to the Server (for example, when you hover your mouse over a Datapoint, in the old world it meant a round-trip to the Server) and faster Drill-down, Selection and Filtering operations.
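The threshold rule described here can be sketched as a simple decision function. This is only an illustration of the rule as stated in this post, not Tableau's actual logic; the function name, the browser-capability flag and the treatment of exactly 5000 marks are assumptions.

```python
LOCAL_RENDERING_THRESHOLD = 5000   # marks, as stated in the text

def choose_rendering(mark_count, browser_supports_canvas=True,
                     threshold=LOCAL_RENDERING_THRESHOLD):
    """Return "client" for Local Rendering, "server" otherwise.

    Views at or above the threshold go to the server (a boundary assumption),
    as do views opened in browsers without HTML5 canvas support.
    """
    if browser_supports_canvas and mark_count < threshold:
        return "client"
    return "server"

# The two mark counts discussed in this blog: 1000 versus 42000.
for marks in (1000, 42000):
    print(f"{marks} marks -> rendered on the {choose_rendering(marks)}")
```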

Update 3/19/13: James Baker from Tableau Software explains why Tableau 8 Dashboards in Web Browser feel more responsive:

http://www.tableausoftware.com/about/blog/2013/3/quiet-revolution-rendering-21874

James explained that “HTML5’s canvas element” is used as the drawing surface. He underscored that for views with many marks it is much faster to send images rather than data, because image size does not scale up linearly with the number of marks. James included a short video that shows incremental filtering in a browser, one of the features of Local Rendering.

DV Album @Picasa

Tableau Readings, January 2013

Best of the Tableau Web… December 2012:

http://www.tableausoftware.com/about/blog/2013/1/best-tableau-web-december-2012-20758

Top 100 Q4 2012 from Tableau Public:

http://www.tableausoftware.com/public/blog/2013/01/top-100-q4-2012-1765

eBay’s usage of Tableau as the front-end for big data, Teradata and Hadoop with 52 petabytes of data on everything from user behavior to online transactions to customer shipments and much more:

http://www.infoworld.com/d/big-data/big-data-visualization-big-deal-ebay-208589

Why The Information Lab recommends Tableau Software:

http://www.theinformationlab.co.uk/2013/01/04/recommend-tableau-software/

Fun with #Tableau Treemap Visualizations

http://tableaulove.tumblr.com/post/40257187402/fun-with-tableau-treemap-visualizations

Talk slides: Tableau, SeaVis meetup & Facebook, Andy Kirk’s Facebook Talk from Andy Kirk

http://www.visualisingdata.com/index.php/2013/01/talk-slides-tableau-seavis-meetup-facebook/

Usage of RAM, Disk and Data Extracts with Tableau Data Engine:

http://www.tableausoftware.com/about/blog/2013/1/what%E2%80%99s-better-big-data-analytics-memory-or-disk-20904

Migrating Tableau Server to a New Domain

https://www.interworks.com/blogs/bsullins/2013/01/11/migrating-tableau-server-new-domain

SAS/Tableau Integration

http://www.see-change.co/services/sastableau-integration/

IFNULL – is not “IF NULL”, is “IF NOT NULL”

http://tableaufriction.blogspot.com/2012/09/isnull-is-not-is-null-is-is-not-null.html

Worksheet and Dashboard Menu Improvements in Tableau 8:

http://tableaufriction.blogspot.com/2013/01/tv8-worksheet-and-dashboard-menu.html

Jittery Charts – Why They Dance and How to Stop Them:

http://tableaufriction.blogspot.com/2013/01/jittery-charts-and-how-to-fix-them.html

Tableau Forums Digest #8

http://shawnwallwork.wordpress.com/2013/01/06/67/

Tableau Forums Digest #9

http://shawnwallwork.wordpress.com/2013/01/14/tableau-forums-digest-9/

Tableau Forums Digest #10

http://shawnwallwork.wordpress.com/2013/01/19/tableau-forums-digest-10/

Tableau Forums Digest #11

http://shawnwallwork.wordpress.com/2013/01/26/tableau-forums-digest-11/

implementation of bandlines in Tableau by Jim Wahl (+ Workbook):

http://community.tableausoftware.com/message/198511

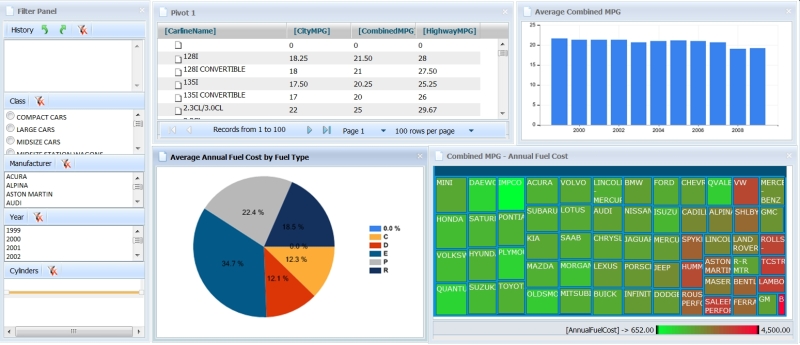

Advizor Visual Discovery, Part 2

This is Part 2 of the guest blog post: the review of Visual Discovery products from Advizor Solutions, Inc., written by my guest blogger Mr. Srini Bezwada (his profile is here: http://www.linkedin.com/profile/view?id=15840828 ), who is the Director of Smart Analytics, a Sydney-based professional BI consulting firm that specializes in Data Visualization solutions. Opinions below belong to Mr. Srini Bezwada.

ADVIZOR Technology

ADVIZOR’s Visual Discovery™ software is built upon strong data visualization technology spun out of a distinguished research heritage at Bell Labs that spans nearly two decades and produced over 20 patents. Formed in 2003, ADVIZOR has succeeded in combining its world-leading data visualization and in-memory data management expertise with extensive usability knowledge and cutting-edge predictive analytics to produce an easy-to-use, point-and-click product suite for business analysis.

ADVIZOR readily adapts to business needs without programming and without implementing a new BI platform, leverages existing databases and warehouses, and does not force customers to build a difficult, time consuming, and resource intensive custom application. Time to deployment is fast, and value is high.

With ADVIZOR, data is loaded into a “Data Pool” in main memory on a desktop or laptop computer, or server. This enables sub-second response time on any query against any attribute in any table, and instantaneous updates of all visualizations. Multiple tables of data are easily imported from a variety of sources.

With ADVIZOR, there is no need to pre-configure data. ADVIZOR accesses data “as is” from various data sources, and links and joins the necessary tables within the software application itself. In addition, ADVIZOR includes an Expression Builder that can perform a variety of numeric, string, and logical calculations as well as parse dates and roll-up tables – all in-memory. In essence, ADVIZOR acts like a data warehouse, without the complexity, time, or expense required to implement a data warehouse! If a data warehouse already exists, ADVIZOR will provide the front-end interface to leverage the investment and turn data into insight.

Data in the memory pool can be refreshed from the core databases / data sources “on demand”, or at specific time intervals, or by an event trigger. In most production deployments data is refreshed daily from the source systems.

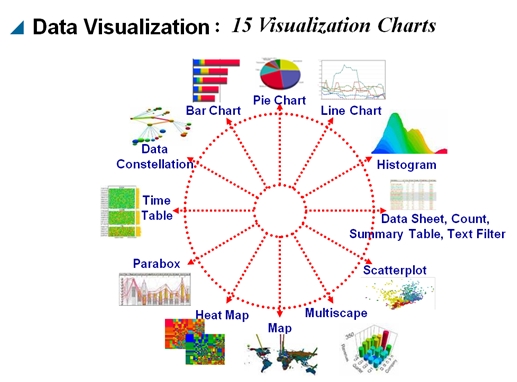

Data Visualization

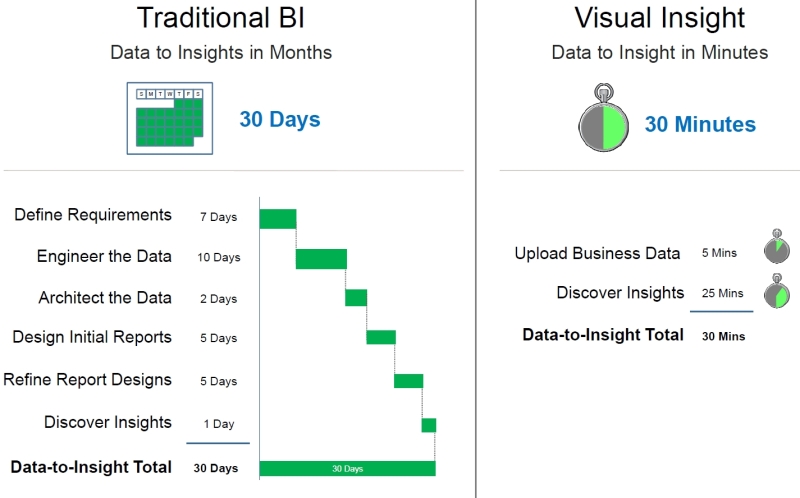

ADVIZOR’s Visual Discovery™ is a full visual query and analysis system that combines the excitement of presentation graphics – used to see patterns and trends and identify anomalies in order to understand “what” is happening – with the ability to probe, drill-down, filter, and manipulate the displayed data in order to answer the “why” questions. Conventional BI approaches (pre-dating the era of interactive Data Visualization) to making sense of data have involved manipulating text displays such as cross tabs, running complex statistical packages, and assembling the results into reports.

ADVIZOR’s Visual Discovery™ makes the text and graphics interactive. Not only can the user gain insight from the visual representation of the data, but now additional insight can be obtained by interacting with the data in any of ADVIZOR’s fifteen (15) interactive charts, using color, selection, filtering, focus, viewpoint (panning, zooming), labeling, highlighting, drill-down, re-ordering, and aggregation.

Visual Discovery empowers the user to leverage his or her own knowledge and intuition to search for patterns, identify outliers, pose questions and find answers, all at the click of a mouse.

Flight Recorder – Track, Save, Replay your Analysis Steps

The Flight Recorder tracks each step in a selection and analysis process. It provides a record of those steps and can be used to repeat previous actions. This is critical for providing context to what an end-user has done and where they are in their data. Flight records also allow setting bookmarks, and can be saved and shared with other ADVIZOR users.

The Flight Recorder is unique to ADVIZOR. It provides:

• A record of what a user has done. Actions taken and selections from charts are listed. Small images of charts that have been used for selection show the selections that were made.

• A place to collect observations by adding notes and capturing images of other charts that illustrate observations.

• A tool that can repeat previous actions, in the same session on the same data or in a later session with updated data.

• The ability to save and name bookmarks, and share them with other users.

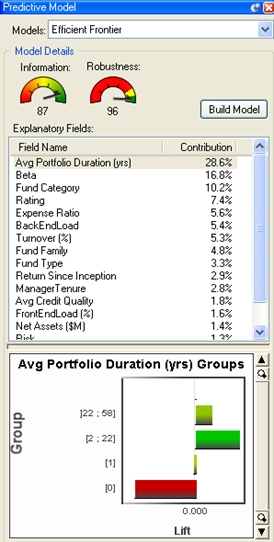

Predictive Analytics Capability

The ADVIZOR Analyst/X is a predictive analytic solution based on a robust multivariate regression algorithm developed by KXEN – a leading-edge advanced data mining tool that models data easily and rapidly while maintaining relevant and readily interpretable results.

Visualization empowers the analyst to discover patterns and anomalies in data by noticing unexpected relationships or by actively searching. Predictive analytics (sometimes called “data mining”) provides a powerful adjunct to this: algorithms are used to find relationships in data, and these relationships can be used with new data to “score” or “predict” results.

Predictive analytics software from ADVIZOR doesn’t require enterprises to purchase platforms. And, since all the data is in-memory, the Business Analyst can quickly and easily condition data and flag fields across multiple tables without having to go back to IT or a DBA to prep database tables. The interface is entirely point-and-click; there are no scripts to write. The biggest benefit from the multi-dimensional visual solution is how quickly it delivers analysis, solving critical business questions, facilitating intelligence-driven decision making, providing instant answers to “what if?” questions.

Advantages over Competitors:

• The only product in the market offering a combination of Predictive Analytics + Data Visualisation + In memory data management within one Application.

• The cost of entry is lower than the market leading data visualization vendors for desktop and server deployments.

• Advanced Visualizations like Parabox, Network Constellation in addition to normal bar charts, scatter plots, line charts, Pie charts…

• Integration with leading CRM vendors like Salesforce.com, Blackbaud, Ellucian, Information Builders

• Ability to provide sub-second response time on query against any attribute in any table, and instantaneously update all visualizations.

• Flight recorder that lets you track, replay, and save your analysis steps for reuse by yourself or others.

Update on 5/1/13 (by Andrei): Advizor 6.0 is available now with substantial enhancements: http://www.advizorsolutions.com/Bnews/tabid/56/EntryId/215/ADVIZOR-60-Now-Available-Data-Discovery-and-Analysis-Software-Keeps-Getting-Better-and-Better.aspx

Advizor Visual Discovery, Part 1

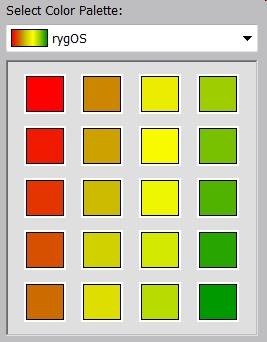

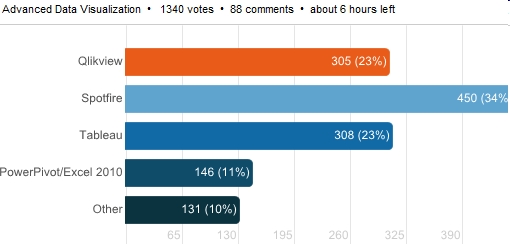

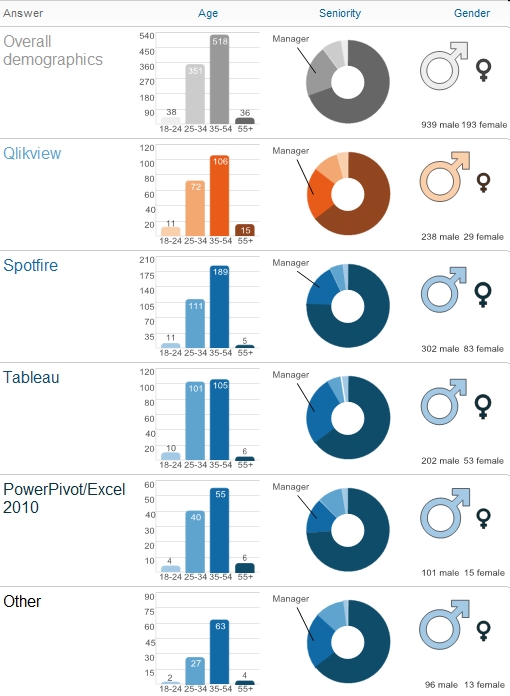

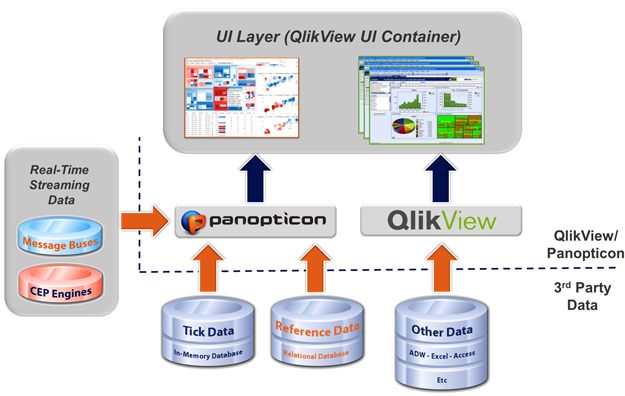

If you visited my blog before, you know that my classification of Data Visualization and BI vendors is different from that of researchers like Gartner. Besides the 3 DV Leaders – Qlikview, Tableau, Spotfire – I rarely have time to talk about other “me too” vendors.

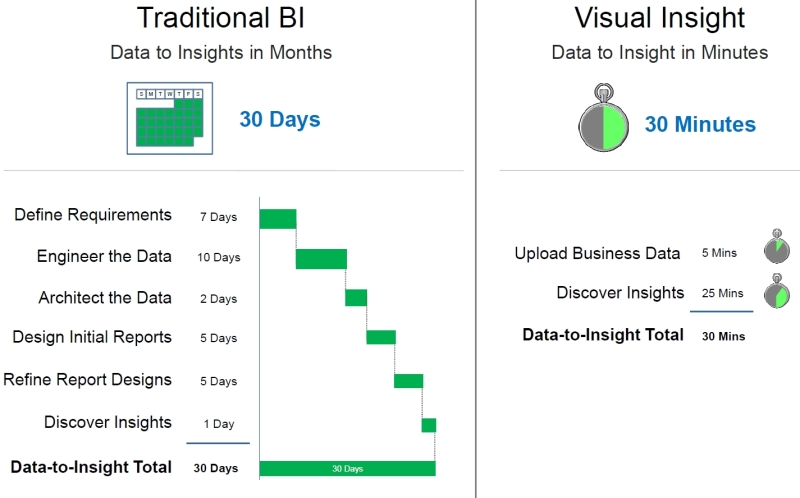

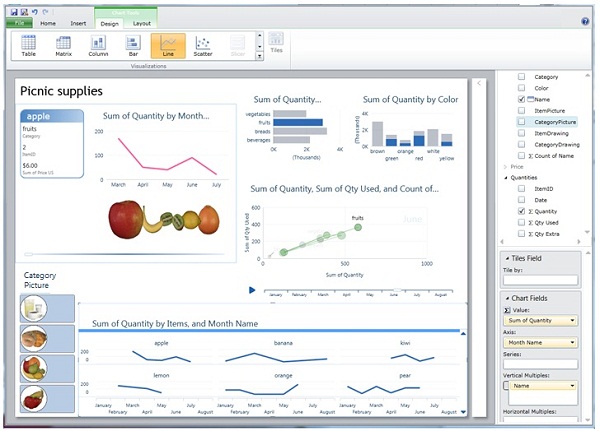

However, sometimes products like Omniscope, Microstrategy’s Visual Insight, Microsoft BI Stack (Power View, PowerPivot, Excel 2013, SQL Server 2012, SSAS etc.), Advizor, SpreadsheetWEB etc. deserve attention too. Covering them takes a lot of time, so I am trying to find guest bloggers for topics like that. 7 months ago I invited volunteers to do some guest blogging about Advizor Visual Discovery Products:

http://apandre.wordpress.com/2012/06/22/advizor-analyst-vs-tableau-or-qlikview/

So far nobody in the USA or Europe has committed to do so, but recently Mr. Srini Bezwada, a Certified Tableau Consultant and Advizor-trained expert from Australia, contacted me and submitted an article about it. He also provided me with info about how Advizor compares with Tableau, so I will do the comparison briefly, using his data and opinions. Mr. Bezwada can be reached at sbezwada@smartanalytics.com.au; he is a director at http://www.smartanalytics.com.au/

Below is a quick comparison of Advizor with Tableau. The opinions below belong to Mr. Srini Bezwada. The next blog post will be a continuation of this article about Advizor Solutions Products; see also Advizor’s website here:

http://www.advizorsolutions.com/products/

| Criteria | Tableau | ADVIZOR | Comment |
| --- | --- | --- | --- |
| Time to implement | Very Fast | Fast, ADVIZOR can be implemented within Days | Tableau Leads |
| Scalability | Very Good | Very Good | Tableau: virtual RAM |
| Desktop License | $1,999 | $1,999 | $3,999 for AnalystX with Predictive modeling |
| Server License/user | $1K, min 10 users, 299K for Enterprise | Deployment license for up to 10 named users $8K | ADVIZOR is a lot cheaper for Enterprise Deployment: $75K for 500 Users |
| Support fees / year | 20% | 20% | 1st year included |
| SaaS Platform | Core or Digital | Offers Managed Hosting | ADVIZOR Leads |
| Overall Cost | Above Average | Competitive | ADVIZOR Costs Less |
| Enterprise Ready | Good for SMB | Cheaper cost model for SMB | Tableau is expensive for Enterprise Deployment |
| Long-term viability | Fastest growth | Private company since 2003. | Tableau is going IPO in 2013 |
| Mindshare | Tableau Public | Growing Fast | Tableau stands out |
| Big Data Support | Good | Good | Tableau is 32-bit |
| Partner Network | Good | Limited Partnerships | Tableau Leads |
| Data Interactivity | Excellent | Excellent | |
| Visual Drilldown | Very Good | Very Good | |
| Offline Viewer | Free Reader | None | Tableau stands out |
| Analyst’s Desktop | Tableau Professional | Advizor has Predictive Modeling | ADVIZOR is a Value for Money |
| Dashboard Support | Excellent | Very Good | Tableau Leads |
| Web Client | Very Good | Good | Tableau Leads |
| 64-bit Desktop | None | Very Good | Tableau still a 32-bit app |
| Mobile Clients | Very Good | Very Good | |
| Visual Controls | Very Good | Very Good | |
| Data Integration | Excellent | Very Good | Tableau Leads |
| Development | Tableau Pro | ADVIZOR Analyst | |
| 64-bit in-RAM DB | Good | Excellent | Advizor Leads |
| Mapping support | Excellent | Average | Tableau stands out |
| Modeling, Analytics | Below Average | Advanced Predictive Modelling | ADVIZOR stands out |
| Predictive Modeling | None | Advanced Predictive Modeling Capability with Built in KXEN algorithms | ADVIZOR stands out |
| Flight Recorder | None | Flight recorder lets you track, replay, save your analysis steps for reuse by yourself or others. | ADVIZOR stands out |
| Visualization | 22 Chart types | All common charts like bar charts, scatter plots, line charts, Pie charts are supported | Advizor has Advanced Visualizations like Parabox, Network Constellation |
| Third party integration | Many Data Connectors, see Tableau’s drivers page | ADVIZOR integrates well with CRM software: Salesforce.com, Ellucian, Blackbaud and others. | ADVIZOR leads in CRM area |
| Training | Free Online and paid Classroom | Free Online and paid via company trainers & Partners | Tableau Leads |

Happy New 2013!

My best wishes for 2013!

2012 was extraordinary for the Data Visualization community and I expect 2013 will be even more interesting. For Data Visualization vendors 2012 was an unusual year that surprised many people.

We can start with Qliktech, which grew only about 18% in 2012 (while in 2011 it was 42% and in 2010 it was 44%), and QLIK stock lost a lot… Spotfire on the other hand grew faster than that and Tableau grew even faster than Spotfire. Tableau doubled its workforce and its sales are now more than $100M per year. Together the sales of Qlikview, Spotfire and Tableau totaled almost $600M in 2012 and I expect they may even reach $800M in 2013. All other vendors are becoming less and less visible on the market. While a breakthrough from companies like Microsoft, Microstrategy, Visokio and Pagos is still possible, it is highly unlikely.

If you search the web for wishes or wishlists for Qlikview or Tableau or Spotfire, you can find plenty of wishes, including even very technical ones. I will partially repeat myself, because some of my best wishes are still wishes and maybe some of them will never be implemented. I will restrict myself to 3 best wishes per vendor.

Let me start with Spotfire, as the most mature product. I will use an analogy: EMC spun off VMWare and (today) the market capitalization of VMWare is close to $40B, about 75% (!) of the Market Capitalization of its parent company EMC! I wish that TIBCO will do the same to Spotfire as EMC did to VMWare. Compared with this wish all other wishes look minimal, like making a Free Spotfire Desktop Reader (similar to what Tableau has) and making part of Spotfire Silver completely Public and Free, similar to … Tableau Public.

For Qliktech I really wish them to stop bleeding capitalization-wise (did they lose $1B of MktCap during the last 9 months?) and sales-wise (growing only 18% in 2012 compared with 42% in 2011). Maybe 2013 is a good time for IBM to buy Qliktech? And yes, I wish for Qlikview Server on Linux (I do not like the new licensing terms of Windows 2012 Server) and I wish (for many years!) for a free Qlikview Desktop Viewer/Reader (similar … to Tableau Reader) in 2013 to enable server-less distribution of Qlikview-based Data Visualizations!

For Tableau I wish a very successful IPO in 2013 and I wish them to grow in 2013 as fast as they did in 2012! I really wish Tableau (and all its processes like VizQL, Application Server, Backgrounder etc.) to become 64-bit in 2013 and of course I wish for Tableau Server on Linux (see my wish for Qlikview above).

Since I still have my best wishes for Microsoft (I guess they will never listen to me anyway), I wish them to stop using the dead product (Silverlight) with Power View in 2013 (just complete the switch to HTML5 already), to make Power View completely separate from SharePoint, and to make it an equal part of Office (integrated with PowerPivot on the Desktop) the same way as Visio and Access are parts of Office. As a result I wish Microsoft to have a Power View (Data Visualization) Server (integrated with SQL Server 2012 of course) as well.

Also here are Flags of 21 countries from where this blog got most visitors in 2012:

Data Visualization set on Flickr

https://plus.google.com/photos/113652689655125910498/albums/5481981245951662737?banner=pwa

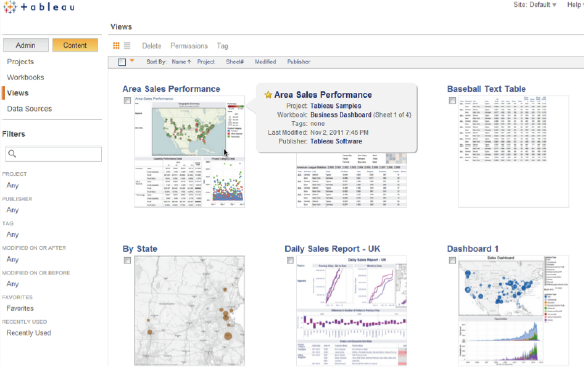

New Tableau 8 Server features

In my previous post http://apandre.wordpress.com/2012/11/16/new-tableau-8-desktop-features/ (this post is the continuation of it), I said that Tableau 8 introduced 130+ new features, 3 times more than Tableau 7 did. Many of these new features are in Tableau 8 Server and this post is about those new Server features (this is a repost from my Tableau blog: http://tableau7.wordpress.com/2012/11/30/new-tableau-8-server-features/ ).

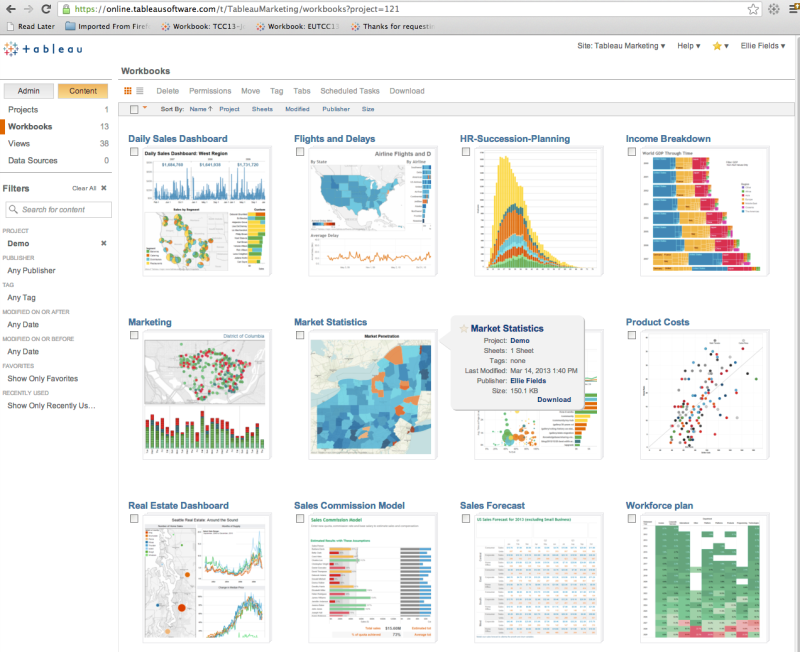

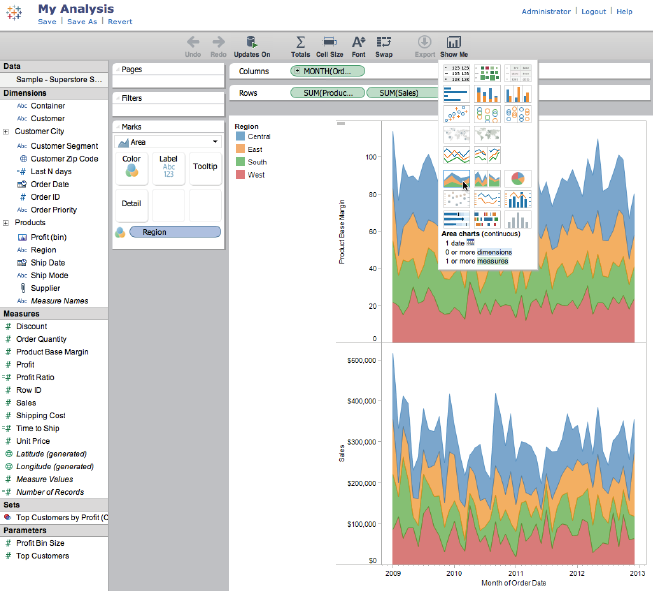

The Admin and Server pages have been redesigned to show more info quicker. In list view the columns can be resized. In thumbnail view the grid dynamically resizes. You can hover over a thumbnail to see more info about the visualization. The content search is better too:

Web authoring (even on mobile) is introduced by Tableau 8 Server. Changing dimensions, measures and mark types, adding filters, and using Show Me can all be done directly in a web browser, and the result can be saved back to the server as a new workbook or, if individual permissions allow, to the original workbook:

Subscribing to a workbook or worksheet will automatically deliver dashboard or view updates to your email inbox. Subscriptions deliver an image and a link.

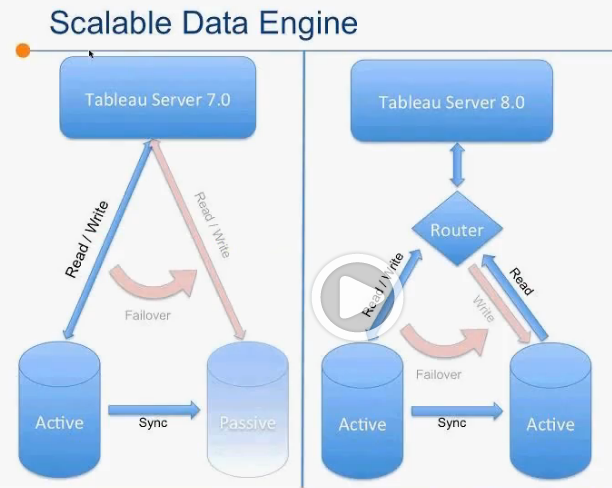

Tableau 8 Data Engine is more scalable now: it can be distributed between 2 nodes, and the 2nd instance of it can now be configured as Active, Synced and Available for reading if the Tableau Router decides to use it (in addition to the Fail-over function as before).

Tableau 8 Server now supports Local Rendering, using the graphic ability of local devices, modern browsers and HTML5. There is no round-trip to the server while rendering with the latest versions of Chrome 23+, Firefox 17+, Safari, IE 9+. Tableau 8 (both Server and Desktop) computes each view in parallel. PDF files generated by Tableau 8 are up to 90% smaller and searchable. And Performance Recorder works on both Server and Desktop.

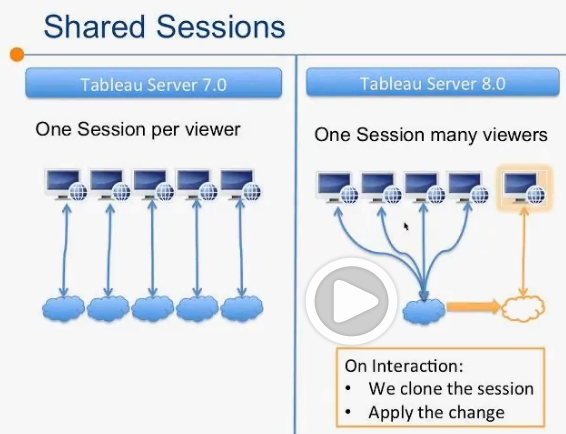

Tableau 8 Server introduces Shared sessions, which allow more concurrency and more caching. Tableau 7 uses 1 session per viewer. Tableau 8 uses one session for many viewers, as long as they do not change the state of filters and don’t do other altering interactions. If an interaction happens, Tableau 8 will clone the session for the appropriate Interactor and apply his/her changes to the new session.
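To make the shared-session idea concrete, here is a minimal Python sketch of clone-on-interaction. It is an illustration only, not Tableau's implementation: the `Session` and `SessionPool` classes and their methods are hypothetical names invented for this example.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical server-side state for one rendered view."""
    view: str
    filters: dict = field(default_factory=dict)


class SessionPool:
    """Share one session per view until a viewer changes its state."""

    def __init__(self):
        self._shared = {}    # view name -> Session shared by passive viewers
        self._private = {}   # (viewer, view) -> Session cloned for an interactor

    def open(self, viewer, view):
        # A viewer who already interacted keeps the private clone.
        key = (viewer, view)
        if key in self._private:
            return self._private[key]
        # Everyone else reads the same shared session for this view.
        return self._shared.setdefault(view, Session(view))

    def apply_filter(self, viewer, view, name, value):
        key = (viewer, view)
        session = self.open(viewer, view)
        if key not in self._private:
            # First state-changing interaction: clone, then modify only the clone.
            session = copy.deepcopy(session)
            self._private[key] = session
        session.filters[name] = value
        return session


pool = SessionPool()
ann = pool.open("ann", "Sales")
bob = pool.open("bob", "Sales")
print(ann is bob)                      # both viewers share one session

bob_private = pool.apply_filter("bob", "Sales", "Region", "West")
print(bob_private is ann, ann.filters)  # bob now has a clone; ann is unaffected
```

The point of the design is that passive viewers cost one session in total, and only viewers who actually interact pay for a session of their own.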

Finally Tableau is getting an API; the 1st part of it I described in my previous blog post about Tableau Desktop: the TDE API (C/C++, Python, Java on both Windows AND Linux!).

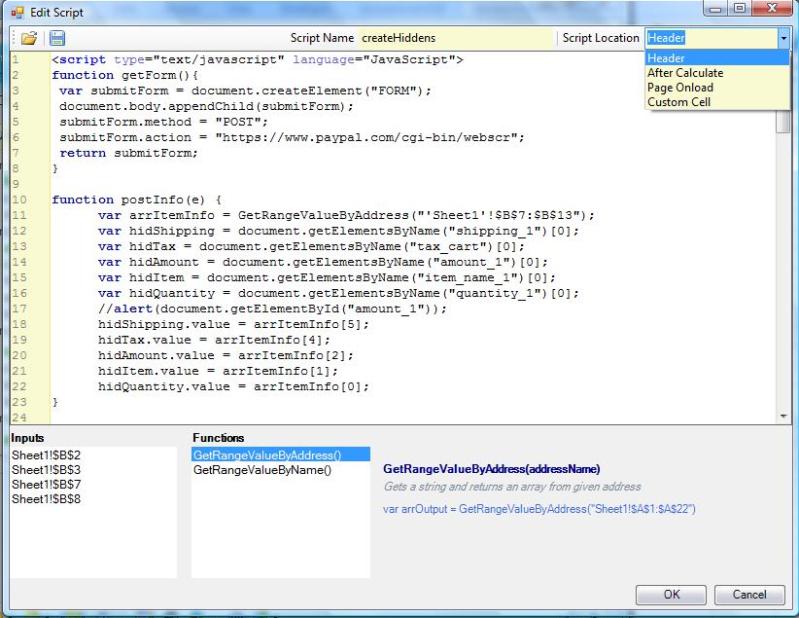

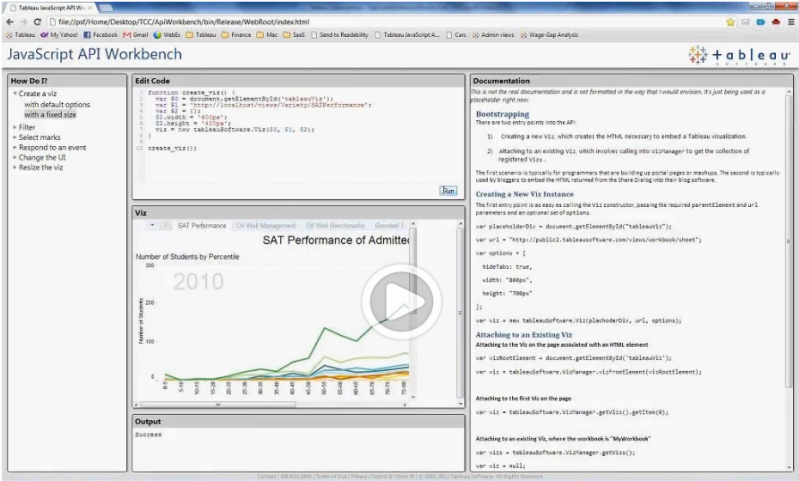

For Web Development Tableau now has a brand new JavaScript API to customize selection, filtering, event triggers, custom toolbars, etc. Tableau 8 has its own JavaScript API WorkBench, which can be used right from your browser:

The TDE API allows you to build your own TDE on any machine with Python, C/C++ and Java (see 24:53 at http://www.tableausoftware.com/tcc12conf/videos/new-tableau-server-8 ). Additionally the Server API (REST API) allows you to programmatically create/enable/suspend sites and add/remove users to sites.

In addition to Faster Uploads and Publishing Data Sources, users can Publish Filters as Sets and User Filters. Data Sources can be Refreshed or Appended instead of republished, all from Local Sources. Such Refreshes can be scheduled using Windows Task Scheduler or other task scheduling software on client devices – this is a real TDE proliferation!

My wishlist for Tableau 8 Server: all Tableau Server processes need to be 64-bit (they are still 32-bit, see it here: http://onlinehelp.tableausoftware.com/v7.0/server/en-us/processes.htm ) and they are way overdue to be 64-bit; a Linux version of Tableau Server is needed (Microsoft recently changed very unfavorably the way they charge users for each Client Access); I wish for integration with the R Library (Spotfire has had it for years); and I want Backgrounder Processes (mostly doing data extracts on the server) not to consume core licenses, etc…

And yes, I found in San Diego even more individuals who found a better way to spend their time compared with attending the Tableau 2012 Customer Conference, and I am not here to judge:

New Tableau 8 Desktop features

I left Tableau 2012 conference in San Diego (where Tableau 8 was announced) a while ago with enthusiasm which you can feel from this real-life picture of 11 excellent announcers:

The conference was attended by 2200+ people and 600+ Tableau Software employees (Tableau almost doubled the number of employees in a year) and it felt like a great effort toward an IPO (see also the article here: http://www.bloomberg.com/news/2012-12-12/tableau-software-plans-ipo-to-drive-sales-expansion.html ). See some video here: TCC12 Keynote. Tableau 8 introduces 130+ new features, 3 times more than Tableau 7 did. Almost half of these new features are in Tableau 8 Desktop and this post is about those new Desktop features (this is a repost from my Tableau Blog: http://tableau7.wordpress.com/2012/11/16/new-tableau-8-desktop-features/). The new Tableau 8 Server features deserve a separate blog post, which I will publish a little later after playing with Beta 1 and maybe Beta 2.

A few days after the conference the Tableau 8 Beta Program started with 2000+ participants. One of the most promising features is the new rendering engine, and I built a special Tableau 7 visualization (and its port to Tableau 8) with 42000 datapoints: http://public.tableausoftware.com/views/Zips_0/Intro?:embed=y to compare the speed of rendering between versions 7 and 8:

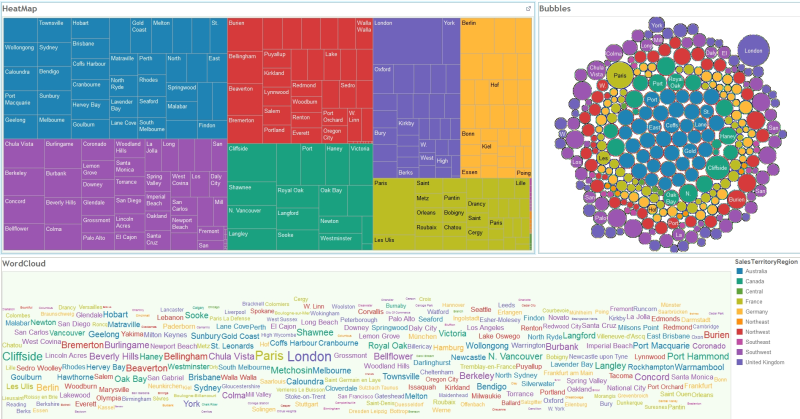

Among the new features are new (for Tableau) visualization types: Heatmap, “Packed” Bubble Chart and Word Cloud, and I built a simple Tableau 8 Dashboard to test them (all 3 visualize a 3-dimensional set where 1 dimension is used as the list of items, 1 measure is used for size and a 2nd measure is used for the color of items):

The list of new features includes improved Sets (comparing members vs. non-members, adding/removing members, combining Sets: all-in-both, shared-by-both, left-except-right, right-except-left), Custom SQL with parameters, Freeform Dashboards (I still prefer an MDI UI where each Chart/View Sheet has its own Child Window as opposed to a Pane), the ability to add multiple fields to Labels, optimized label placement, built-in statistical models for visual Forecasting, Visual Grouping based on your data selection, and a redesigned Mark Card (for Color, Size, Label, Detail and Tooltip Shelves).
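The four ways of combining Sets listed above map directly onto ordinary set algebra. As a small sketch (plain Python sets with made-up member values, not Tableau code; the function name `combine_sets` is hypothetical):

```python
def combine_sets(left, right):
    """Return the four combinations named in the feature list above."""
    return {
        "all-in-both": left | right,        # union
        "shared-by-both": left & right,     # intersection
        "left-except-right": left - right,  # difference
        "right-except-left": right - left,  # difference, other direction
    }


# Hypothetical members: states picked in two different selections.
left = {"CA", "NY", "TX", "WA"}
right = {"NY", "TX", "FL"}

for name, members in combine_sets(left, right).items():
    print(name, sorted(members))
```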

New Data features include data blending without a mandatory linked field in a view and with the ability to filter data in secondary data sources; refreshing server-based Data Extracts can be done from local data sources; Data Filters (in addition to being either local or global) can now be shared among a selected set of worksheets and dashboards. A refresh of a Data Extract can be done from the command prompt for Tableau Desktop, for example

>tableau.exe refreshremoteextract

Tableau 8 has (finally) API (C/C++, Python, Java) to directly create a Tableau Data Extract (TDE) file, see example here: http://ryrobes.com/python/building-tableau-data-extract-files-with-python-in-tableau-8-sample-usage/

Tableau 8 (both Desktop and Server) can then connect to this extract file natively! Tableau provides new native connections for Google Analytics and Salesforce.com. TDE files are now much smaller (especially with text values): up to 40% smaller compared with Tableau 7.

Tableau 8 has performance enhancements, such as the new ability to use hardware accelerators (of modern graphics cards) and computing views within a dashboard in parallel (in Tableau 7 it was consecutive computations); the new performance recorder allows you to estimate and tune the workload of various activities and functions and optimize the behavior of a workbook.

I still have a wishlist of features which are not implemented in Tableau and I hope some of them will be implemented later: all Tableau processes are 32-bit (except the 64-bit version of the data engine for a server running on a 64-bit OS) and they are way overdue to be 64-bit; many users demand a Mac version of Tableau Desktop and a Linux version of Tableau Server (Microsoft recently changed very unfavorably the way they charge users for each Client Access); I wish for an MDI UI for Dashboards where each view of each worksheet has its own Window as opposed to its own pane (Qlikview has done it from the beginning of time); I wish for integration with the R Library (Spotfire has had it for years), scripting languages and an IDE (preferably Visual Studio); and I want Backgrounder Processes (mostly doing data extracts on the server) not to consume core licenses, etc…

Despite the great success of the conference, I found somebody in San Diego who did not pay attention to it (outside was 88F, sunny and beautiful):

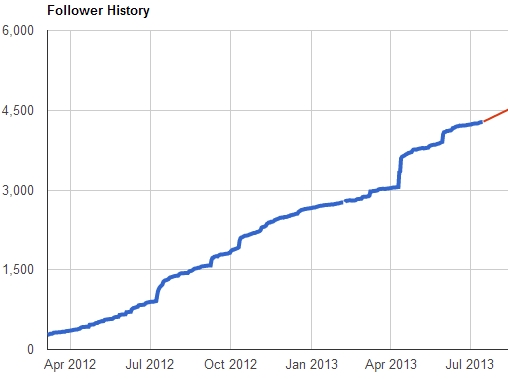

Google+ extension of this blog: 4277+ followers

On May 3rd of 2012 the Google+ extension http://tinyurl.com/VisibleData of this Data Visualization blog reached 500+ followers, on July 9 it got 1000+ users, on October 11 it already had 2000+ users, and on 11/27/12 my G+ Data Visualization Page had 2190+ followers and is still growing every day (updated as of 12/01/12: 2500+ followers).

One of the reasons of course is just the popularity of Data Visualization related topics and another reason is covered in an interesting article here:

http://www.computerworld.com/s/article/9232329/Why_I_blog_on_Google_And_how_ .

In any case, it helped me to create a reading list for myself and other people, based on the feedback I got. According to CircleCount, as of the 11/13/12 update, my Data Visualization Google+ Page ranked as the #178 most popular page in the USA. Thank you G+ !

Update 5/25/13: the G+ extension of this blog now has 3873+ followers (and as of 7/15/13, 4277+ followers):

Qlikview can go outside RAM, finally

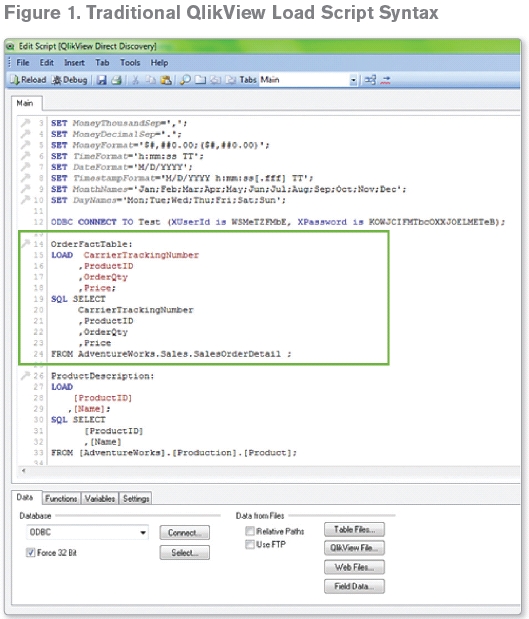

Qlikview 10 was released around 10/10/10, Qlikview 11 – around 11/11/11, so I expected Qlikview 12 to be released on 12/12/12 but “instead” we are getting Qlikview 11.2 with Direct Discovery in December 2012, which supposedly provides a “hybrid approach so business users can get the QlikView associative experience even with data that is not stored in memory”

This feature has been demanded by users (me included) for a long time, but I think the noise around so-called Big Data and the competition forced Qliktech to do it. Spotfire has had it for a long time (as well as a 64-bit implementation) and Tableau has had something like that for a while (unfortunately Tableau is still 32-bit). You can test the Beta of it, if you have time: http://community.qlikview.com/blogs/technicalbulletin/2012/10/22/qlikview-direct-discovery-beta-registration-is-open

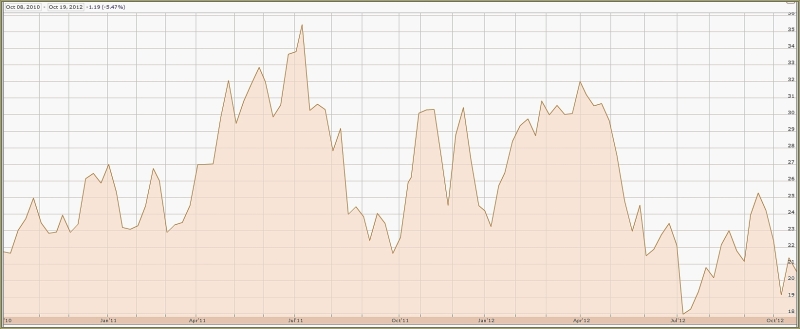

Just 8 months ago Qliktech estimated its sales for 2012 as $410M and suddenly 3 months ago it changed its estimate down to $381M, just 19% over 2011, which is in huge contrast with Qliktech’s previous speed of growth, way behind the current speed of growth of Tableau and even less than the current speed of growth of Spotfire. During the last 2 years QLIK stock has been unable to grow significantly:

All of the above is forcing Qliktech to do something beyond gradual improvements: new and exciting functionality is needed and Direct Discovery may help!

QlikView Direct Discovery enables users to perform visual analysis against “any amount of data, regardless of size”. With the introduction of this unique hybrid approach, users can associate data stored within big data sources directly alongside additional data sources stored within the QlikView in-memory model. QlikView can “seamlessly connect to multiple data sources together within the same interface”, e.g. Teradata to SAP to Facebook, allowing the business user to associate data across the data silos. Data outside of RAM can be joined with the in-memory data through common field names. This allows the user to associatively navigate both the direct discovery and the in-memory data sets.

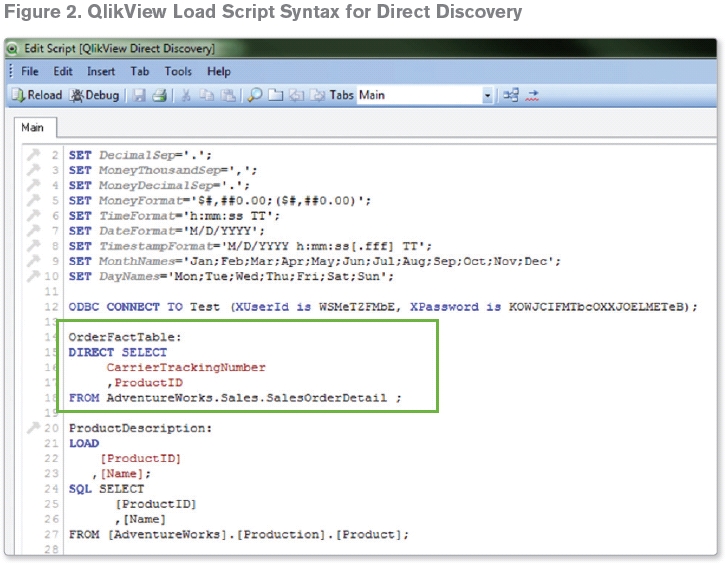

The QlikView developer should set up the Direct Discovery table in the QlikView application load script to allow the business users to query the desired big data source. Within the script editor a new syntax is introduced to connect to data in direct discovery form. Traditionally the following syntax is required to load data from a database table:

To invoke the direct discovery method, the keyword “SQL” is replaced with “DIRECT”.

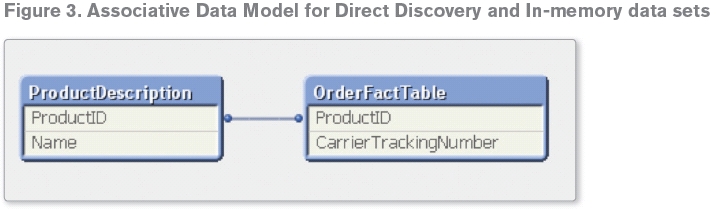

In the example above only the columns CarrierTrackingNumber and ProductID are loaded into QlikView in the traditional manner; other columns exist in the data table within the Database, including the columns OrderQty and Price. The OrderQty and Price fields are referred to as “IMPLICIT” fields. An implicit field is a field that QlikView is aware of on a “meta level”. The actual data of an implicit field resides only in the database but the field may be used in QlikView expressions. Looking at the table view and data model, the direct discovery columns are not within the model (on the OrderFact table):

Once the direct discovery structure is established, the direct discovery data can be joined with the in-memory data with the common field names (Figure 3). In this example, “ProductDescription” table is loaded in-memory and joined to direct discovery data with the ProductID field. This allows the user to associatively navigate both on the “direct discovery” and in memory data sets.
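The hybrid idea described above can be sketched in a few lines of Python. This is not QlikView script and not how QlikView is implemented; it only imitates the pattern, under these assumptions: an in-memory SQLite database stands in for the external database, a plain dict stands in for the in-memory ProductDescription table, the sample rows are made up, and the function name `revenue_by_product` is hypothetical. The implicit fields (OrderQty, Price) are aggregated inside the database, and only the small result is joined in memory on ProductID.

```python
import sqlite3

# Stand-in for the external database; raw OrderQty and Price rows stay here.
db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE OrderFact ("
    "CarrierTrackingNumber TEXT, ProductID INTEGER, OrderQty INTEGER, Price REAL)"
)
db.executemany(
    "INSERT INTO OrderFact VALUES (?, ?, ?, ?)",
    [("A1", 1, 2, 10.0), ("A2", 1, 1, 10.0), ("B1", 2, 5, 3.0), ("C1", 3, 4, 2.5)],
)

# Stand-in for the table loaded into memory the traditional way.
product_description = {1: "Helmet", 2: "Bottle", 3: "Gloves"}


def revenue_by_product(selected_ids):
    """Aggregate the database-only fields remotely, then join in memory on ProductID."""
    ids = sorted(selected_ids)
    placeholders = ",".join("?" for _ in ids)
    rows = db.execute(
        "SELECT ProductID, SUM(OrderQty * Price) FROM OrderFact "
        f"WHERE ProductID IN ({placeholders}) GROUP BY ProductID",
        ids,
    ).fetchall()
    # The join key is the field name shared by both data sets.
    return {product_description[pid]: total for pid, total in rows}


print(revenue_by_product({1, 3}))
```

A selection made on the in-memory side (here the product IDs 1 and 3) becomes a WHERE clause on the database side, which is also why such queries will be slower than pure in-memory work.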

Direct Discovery will be much slower than in-memory processing and this is expected, but it will take away from Qlikview its usual claim that it is faster than competitors. QlikView Direct Discovery can only be used against SQL compliant data sources. The following data sources are supported:

• ODBC/OLEDB data sources – All ODBC/OLEDB sources are supported, including SQL Server, Teradata and Oracle.

• Custom connectors which support SQL – Salesforce.com, SAP SQL Connector, Custom QVX connectors for SQL compliant data stores.

Due to the interactive and SQL-syntax-specific nature of the Direct Discovery approach, a number of limitations exist. The following chart types are not supported:

• Pivot tables

• Mini charts

And the following QlikView features are not supported:

• Advanced aggregation

• Calculated dimensions

• Comparative Analysis (Alternate State) on the QlikView objects that use Direct Discovery fields

• Direct Discovery fields are not supported on Global Search

• Binary load from a QlikView application with Direct Discovery table

Here is a preliminary video about Direct Discovery, published by Qliktech:

It was interesting to me that just 2 days after Qliktech pre-announced Direct Discovery it also partnered with Teradata. Tableau has partnered with Teradata for a while and Spotfire did it a month ago, so I guess Qliktech is trying to catch up in this regard as well. I mention it only to underscore the point of this blog post: Qliktech realized that it is behind its competitors in some areas and it has to follow ASAP.

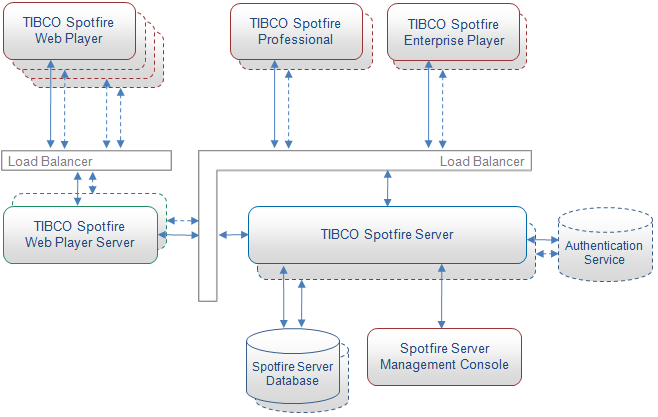

Spotfire 5 is announced

Today TIBCO announced Spotfire 5, which will be released in November 2012. The two biggest news items are the access to SQL Server Analysis Services cubes and the integration with Teradata “by pushing all aggregations, filtering and complex calculations used for interactive visualization into the (Teradata) database”.

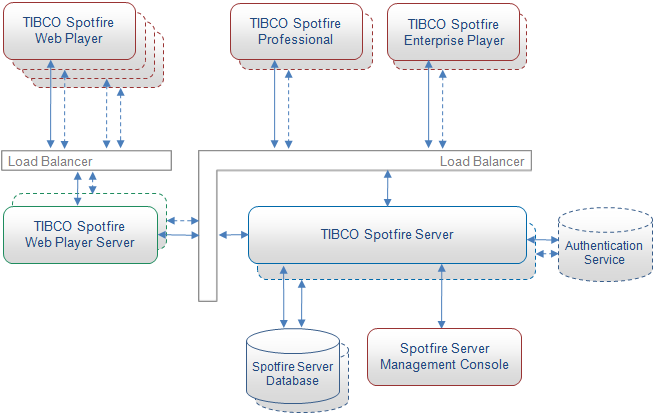

Spotfire team “rewrote” its in-memory engine for v. 5.0 to take advantage of high-capacity, multi-core servers. “Spotfire 5 is capable of handling in-memory data volumes orders of magnitude greater than the previous version of the Spotfire analytics platform” said Lars Bauerle, vice president of product strategy at TIBCO Spotfire.

Another addition is “in-database analysis”, which allows analytics to be applied within the database platforms (such as Oracle, Microsoft SQL Server and Teradata) without extracting and moving data, while handling analyses on the Spotfire server and returning result sets back to the database platform.

Spotfire added the new TIBCO Enterprise Runtime for R, which embeds an R runtime engine into the Spotfire statistical server. TIBCO claims that Spotfire 5.0 scales to tens of thousands of users! Spotfire 5 is designed to leverage the full family of TIBCO business optimization and big data solutions, including TIBCO LogLogic®, TIBCO Silver Fabric, TIBCO Silver® Mobile, TIBCO BusinessEvents®, tibbr® and TIBCO ActiveSpaces®.

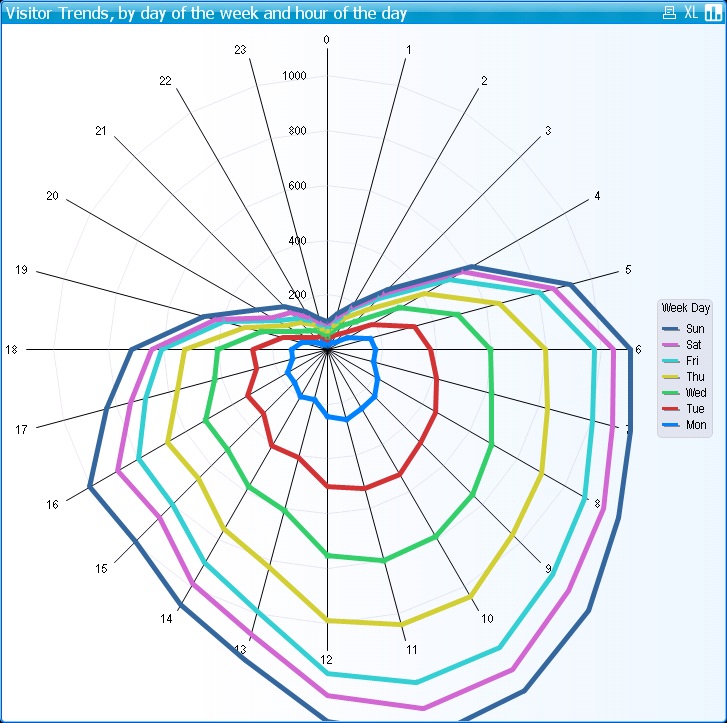

Data Visualization Seminar at MassTLC 9/20/12

The Mass Technology Leadership Council (MassTLC) organized today the Data Visualization Panel in their series of “Big Data Seminars”:

http://www.masstlc.org/events/event_details.asp?id=243502

and they invited me to be a Speaker and Panelist together with Irene Greif (Fellow @IBM) and Martin Leach (CIO @Broad Institute). Most interesting about this event was that it was sold out and about 150 people came to participate, even though it was the most productive time of the day (from 8:30am until 10:30am). Compared with what I observed just a few years ago, I sensed a huge interest in Data Visualization, based on the multiple, very interesting and relevant questions I got from event participants.

Power View in Excel 2013

I doubt that Microsoft is paying attention to my blog, but recently they declared that Power View now has 2 versions: one for SharePoint (thanks, but no thanks) and one for Excel 2013. In other words, Microsoft decided to have its own Desktop Visualization tool. In combination with PowerPivot and SQL Server 2012 it can be attractive for some Microsoft-oriented users, but I doubt it can compete with the Data Visualization Leaders: too late.

Most interesting is the note about Power View 2013 on Microsoft site: “Power View reports in SharePoint are RDLX files. In Excel, Power View sheets are part of an Excel XLSX workbook. You can’t open a Power View RDLX file in Excel, and vice versa. You also can’t copy charts or other visualizations from the RDLX file into the Excel workbook.“

But most amazing is that Microsoft decided to use the dead Silverlight for Power View: “Both versions of Power View need Silverlight installed on the machine.” And we know that Microsoft switched to HTML5 from Silverlight and no new development is planned for Silverlight! Good luck with that…

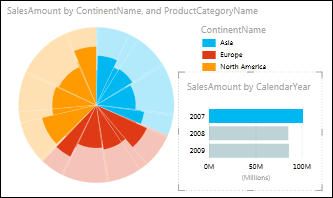

And yes, you can add now maps (Bing of course), see it here:

LinkedIn Stats about Data Visualization tools

I have used LinkedIn for years to measure how many people mention Data Visualization tools on their profiles, how many LinkedIn groups are dedicated to those DV tools and what the group membership is. Recently these statistics have shown dramatic changes in favor of Qlikview and Tableau as undisputed leaders in people’s opinions.

Here is how many people mentioned specific tools (statistics were updated on 9/4/12 and numbers changing every day) on their profiles:

- Tableau – 18584,

- Qlikview – 17471,

- Spotfire – 3829,

- SAS+JMP – 3443,

- PowerPivot – 2335

Sample of “People” search URL: http://www.linkedin.com/search/fpsearch?type=people&keywords=Tableau or http://www.linkedin.com/search/fpsearch?type=people&keywords=SAS+JMP

Here is how many groups are dedicated to each tool [in brackets a “pessimistic” estimate of total non-overlapping membership]:

- Qlikview – 169 [13000+],

- Tableau – 76 [6000+],

- Spotfire – 29 [2000+],

- SAS (+AND+) JMP – 23 [2000+],

- PowerPivot – 16 [2000+]

Sample of “Group” search URL: http://www.linkedin.com/search-fe/group_search?pplSearchOrigin=GLHD&keywords=Qlikview

Advizor Analyst vs. Tableau or Qlikview…

I have been feeling guilty for many months now: I literally do not have time for a project I have wished to do for a while: to compare Advizor Analyst and other Visual Discovery products from Advizor Solutions, Inc. with leading Data Visualization products like Tableau or Qlikview. I am asking visitors of my blog to volunteer and be a guest blogger here; the only pre-condition is that a guest blogger must be an Expert in Advizor Solutions products and equally so in one of these 3: Tableau, Qlikview or Spotfire.

ADVIZOR’s Visual Discovery™ software is built upon strong data visualization technology spun out of a research heritage at Bell Labs that spans nearly two decades and produced over 20 patents. Formed in 2003, ADVIZOR has succeeded in combining its world-leading data visualization and in-memory-data-management expertise with predictive analytics to produce an easy to use, point and click product suite for business analysis.

Advizor has many Samples, Demos and Videos on its site: http://www.advizorsolutions.com/gallery/ and some web Demos, like this one

http://webnav.advizorsolutions.net/adv/Projects/demo/MutualFunds.aspx but you will need the Silverlight plugin for your web browser installed.

If you think that Advizor can compete with Data Visualization leaders and you have interesting comparison of it, please send it to me as MS-Word article and I will publish it here as a guest blog post. Thank you in advance…

Tableau as the front-end for Big Data

(this is a repost from my other blog: http://tableau7.wordpress.com/2012/06/09/tableau-and-big-data/ )

Big Data can be useless without multi-layer data aggregations and hierarchical or cube-like intermediary Data Structures, when ONLY a few dozen, hundreds or thousands of data-points are exposed visually and dynamically at every single viewing moment to analytical eyes for interactive drill-down-or-up hunting for business value(s) and actionable datum (or “datums” – if plural means data). One of the best expressions of this concept (at least how I interpreted it) I heard from my new colleague, who flatly said:

“Move the function to the data!”
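One way to read that advice is in terms of how many rows have to leave the database. The sketch below is only an illustration with assumptions of my own choosing: an in-memory SQLite database stands in for a big back-end, the `sales` table and its 30,000 rows are made up, and both function names are hypothetical.

```python
import sqlite3
from collections import defaultdict

# Stand-in for a large back-end table.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
db.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [(("East", "West", "North")[i % 3], i) for i in range(30_000)],
)


def pull_then_aggregate():
    """Move the data to the function: every row travels to the client."""
    rows = db.execute("SELECT region, amount FROM sales").fetchall()
    totals = defaultdict(int)
    for region, amount in rows:
        totals[region] += amount
    return dict(totals), len(rows)


def aggregate_in_database():
    """Move the function to the data: only one row per region comes back."""
    rows = db.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region"
    ).fetchall()
    return dict(rows), len(rows)


client_totals, client_rows = pull_then_aggregate()
db_totals, db_rows = aggregate_in_database()
print(client_totals == db_totals)   # same answer either way
print(client_rows, db_rows)         # 30000 rows transferred versus 3
```

Both paths give the same totals; the difference is that the second one ships 3 rows to the visualization layer instead of 30,000.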

I recently got involved with multiple projects using large data-sets for Tableau-based Data Visualizations (100+ million rows and even Billions of records!). Some of the largest examples I used were: one with 800+ million records and another with 2+ billion rows.

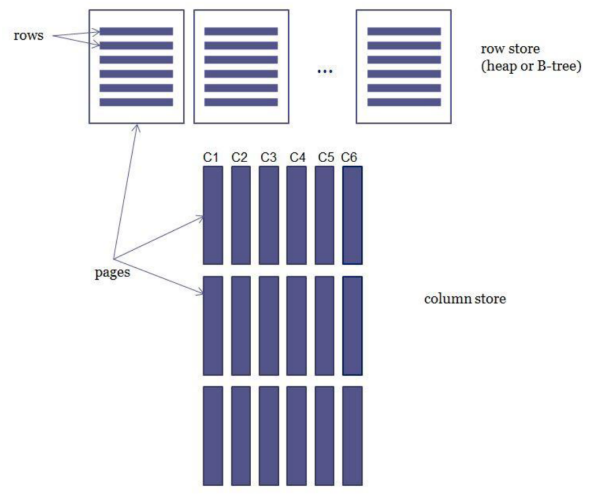

So this blog post is to express my thoughts about such Big Data (on average the examples above have about 1+ KB per CSV record before compression and other advanced DB tricks, like the columnar Databases used by the Data Engine of Tableau) as a back-end for Tableau.

Here are some Factors involved in Data Delivery from the main and designated Database (Back-ends like Teradata, DB2, SQL Server or Oracle for Tableau-based Big Data Visualizations) into “local” Tableau Visualizations (many people are still trying to use Tableau as a Reporting tool as opposed to a (Visual) Analytical Tool):

- Queuing of thousands of Queries to the Database Server. There is no guarantee your Tableau query will be executed immediately; in fact it WILL be delayed.

- The speed of a Tableau Query, once it starts to execute, depends on sharing CPU cycles, RAM and other resources with other queries executed SIMULTANEOUSLY with your query.

- Buffers, pools and other resources available for particular user(s) and queries at your Database Server differ and depend on the privileges and settings given to you as a Database User.

- Network speed: between some servers it can be 10Gbits (or even more); in most cases it is 1Gbit inside server rooms; outside of server rooms I observed in many old buildings (over wired Ethernet) a max of 100Mbits coming into the user’s PC; if you are using Wi-Fi it can be even less (say 54 Mbits?). If you are using the internet it can be even less (I observed speeds in some remote offices of 1 Mbit or so over old T-1 lines); if you are using VPN it will max out at 4Mbits or less (I observed it in my home office).

- Utilization of the network. I use Remote Desktop Protocol (RDP) from my workstation or notebook to a VM (a VM or VDI Virtual Machine sitting in the server room) connected to servers with a network speed of 1Gbit, but it is still using a maximum of 3% of the network speed (about 30 MBits, which is about 3 Megabytes of data per second, which is probably about a few thousand records per second).

That means the network may have a problem delivering 100 million records to a “local” report overnight (say 10 hours: 10 million records per hour, or about 3000 records per second), partially and probably because of the network factors listed above.
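The arithmetic behind that estimate can be sketched in a few lines of Python. This is only a back-of-envelope illustration: the record size (about 1 KB, uncompressed), the 1 Gbit/s link and the 3% utilization are the rough figures quoted above, and the function names are made up for this example.

```python
# Back-of-envelope estimate; every input is an assumption taken from the text above.
RECORDS = 100_000_000               # records to deliver to the "local" report
WINDOW_HOURS = 10                   # overnight delivery window
RECORD_BYTES = 1_000                # ~1 KB per CSV record, before compression
LINK_BITS_PER_SEC = 1_000_000_000   # 1 Gbit/s link inside the server room
UTILIZATION = 0.03                  # ~3% of the link actually used


def required_records_per_sec(records, hours):
    """Rate needed to move all records within the time window."""
    return records / (hours * 3600)


def deliverable_records_per_sec(link_bps, utilization, record_bytes):
    """Rate the link can sustain at the observed utilization."""
    usable_bytes_per_sec = link_bps * utilization / 8  # bits -> bytes
    return usable_bytes_per_sec / record_bytes


needed = required_records_per_sec(RECORDS, WINDOW_HOURS)
available = deliverable_records_per_sec(LINK_BITS_PER_SEC, UTILIZATION, RECORD_BYTES)

print(f"needed:    {needed:,.0f} records/s")
print(f"available: {available:,.0f} records/s")
print(f"headroom:  {available / needed:.2f}x")
```

With these inputs the link can carry only slightly more than the required rate, so any queuing delay on the database side or a slower network segment is enough to miss the overnight window.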

On top of those factors, please keep in mind that Tableau is a set of 32-bit applications (with the exception of one out of 7 processes on the Server side), each restricted to 2GB of RAM; if the data-set cannot fit into RAM, then the Tableau Data Engine will use the disk as Virtual RAM, which is much, much slower, and for some users such disk space is actually not local to their workstation but mapped to some “remote” network file server.

Tableau Desktop in many cases uses 32-bit ODBC drivers, which may add even more delay to data delivery into the local “Visual Report”. As we learned from Tableau support itself, even with the latest Tableau Server 7.0.X, the RAM allocated for one user session is restricted to 3GB anyway.

Unfortunate Update: Tableau 8.0 will be a 32-bit application again, but maybe a follow-up version 8.x or 9 (I hope) will be ported to 64 bits… It means that Spotfire, Qlikview and even PowerPivot will keep some advantages over Tableau for a while…

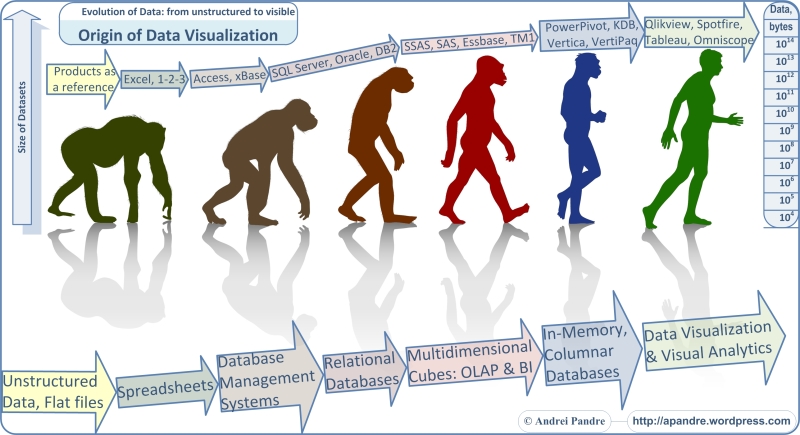

Data Visualization tool is a Presentation Tool

(this is a repost from my other Data Visualization blog: http://tableau7.wordpress.com/2012/05/31/tableau-as-container/ )

Often I used small Tableau (or Spotfire or Qlikview) workbooks instead of PowerPoint, which proves at least 2 concepts:

-

A good Data Visualization tool can be used as the Web or Desktop Container for Multiple Data Visualizations (it can be used to build hierarchical Container Structures with more than 3 levels; currently 3: Container-Workbooks-Views)

-

It can be used as a replacement for PowerPoint; in the example below I embedded into this Container 2 Tableau Workbooks, one Google-based Data Visualization, 3 image-based Slides and a Textual Slide: http://public.tableausoftware.com/views/TableauInsteadOfPowerPoint/1-Introduction

-

Tableau (or Spotfire or Qlikview) is better than PowerPoint for Presentations and Slides

-

Tableau (or Spotfire or Qlikview) is the Desktop and the Web Container for Web Pages, Slides, Images, Texts

-

Good Visualization Tool can be a Container for other Data Visualizations

-

Sample Tableau Presentation above contains the Introductory Textual Slide

-

Sample Tableau Presentation above contains a few Tableau Visualization:This Tableau Presentation contains a Web Page with the Google-based Motion Chart Demo

-

The Drill-down Demo

-

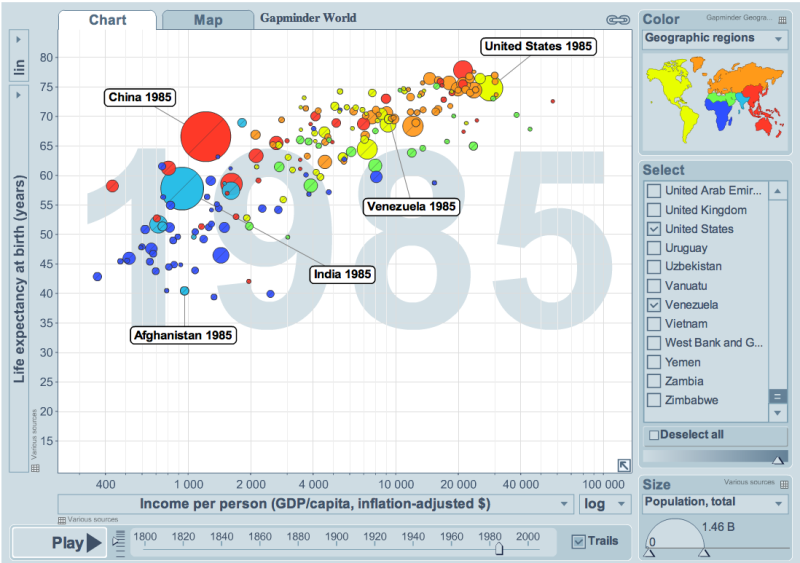

The Motion Chart Demo ( 6 dimensions: X,Y, Shape, Color, Size, Motion in Time)

-

-

This Tableau Presentation contains a few Image-based Slides:

-

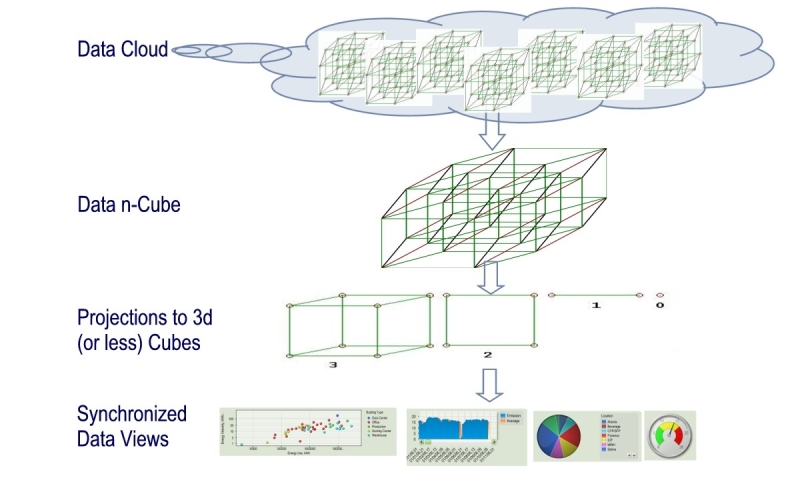

The Quick Description of Origins and Evolution of Software and Tools used for Data Visualizations during the last 30+ years

-

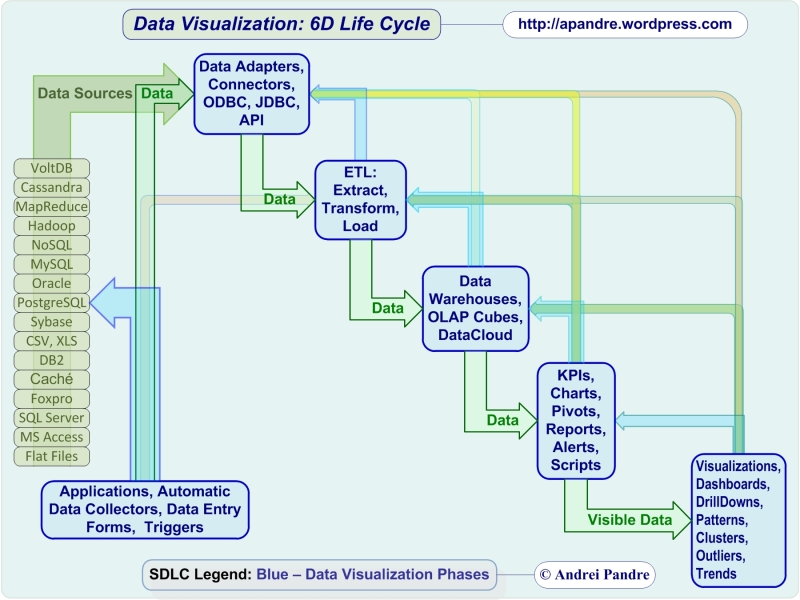

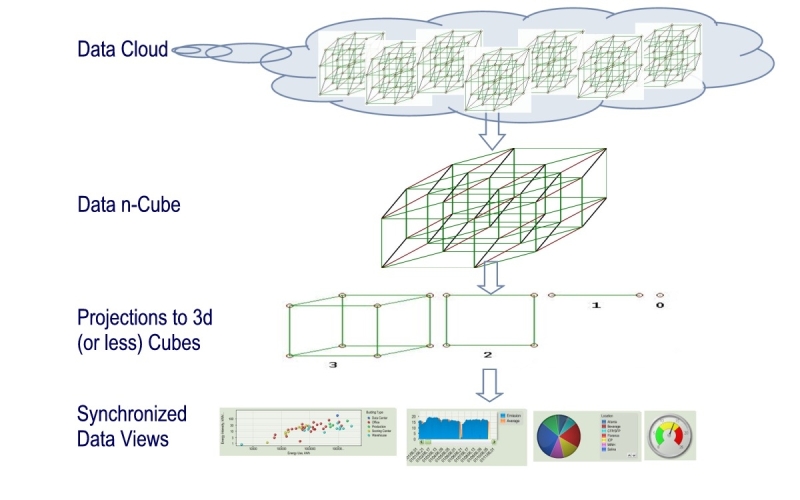

The Description of Multi-level Projection from Multidimensional Data Cloud to Datasets, Multidimensional Cubes and to Chart

-

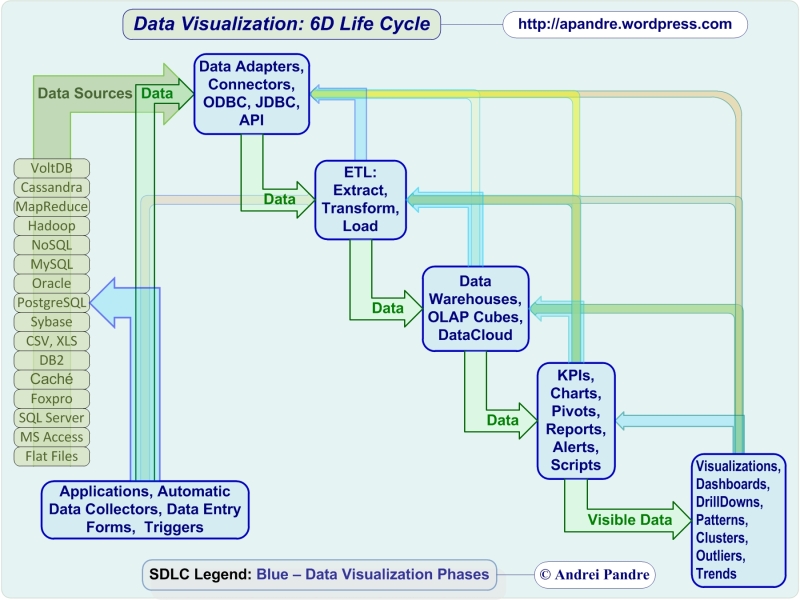

The Description of 6 stages of Software Development Life Cycle for Data Visualizations


Spotfire 4.5 is announced

TIBCO said Spotfire 4.5 will be available later this month (May 2012).

Among the news and additions in Spotfire 4.5: it will include an ADS connector to Hadoop, integration with SAS, Mathworks and Attivio engines, and a new deployment kit for iPad.

Tableau vs. Qlikview

Some people are pushing me to respond to Donald Farmer’s recent comments on my previous post, but I need more time to think about it.

Meanwhile today Ted Cuzzillo published an interesting comparison of Qlikview vs. Tableau here:

http://datadoodle.com/2012/04/24/tableau-qlikview/

named “The future of BI in two words”, which made me feel warm and fuzzy about both products and unclear about what Ted’s judgement is.

Fortunately I had a more “digitized” comparison of these 2 Data Visualization Leaders, which I did a while ago for a different reason. So I modified it a little to bring it up-to-date and you can see it for yourself below. The funny thing is that even though I used 30+ criteria to measure and compare those two brilliant products, the final score is almost identical for both of them, so it is still warm and fuzzy.

Basically the conclusion is simple: each product is better for certain customers and for certain projects; there is no universal answer (yet?):

Power View: 3rd strike and Microsoft out?

The short version of this post: as far as Data Visualization is concerned, the new Power View from Microsoft is a marketing disaster, an architectural mistake and a generous gift from Microsoft to Tableau, Qlikview, Spotfire and dozens of other vendors.

For the long version – keep reading.

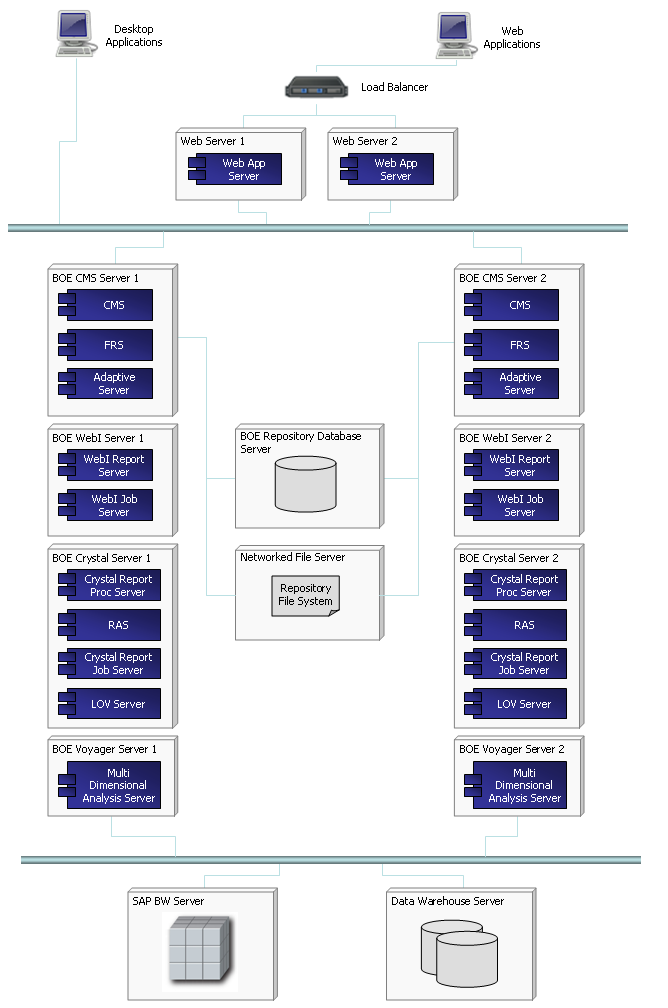

Assume for a minute (OK, just for a second) that the new Power View Data Visualization tool from Microsoft SQL Server 2012 is almost as good as Tableau Desktop 7. Now let’s compare the installation, configuration and hardware involved:

Tableau:

- Hardware: almost any modern Windows PC/notebook (at least dual-core, 4GB RAM).

- Installation: a) one 65MB setup file, b) minimum or no skills

- Configuration: 5 minutes – follow instructions on screen during installation.

- Price – $2K.

Power View:

- Hardware: you need at least 2 server-level PCs (each at least quad-core, 16GB RAM recommended). I do not recommend using 1 production server to host both SQL Server and SharePoint; if you are desperate, at least use VM(s).

- Installation: a) Each Server needs Windows 2008 R2 SP1 – 3GB DVD; b) 1st Server needs SQL Server 2012 Enterprise or BI Edition – 4GB DVD; c) 2nd Server needs SharePoint 2010 Enterprise Edition – 1GB DVD; d) A lot of skills and experience

- Configuration: hours or days, plus a lot of reading, previous knowledge etc.

- Price: $20K or if only for development it is about $5K (Visual Studio with MSDN subscription) plus cost of skilled labor.

As you can see, Power View simply cannot compete on the mass market with Tableau (and Qlikview and Spotfire), and the time for the assumption made at the beginning of this post has expired. Instead, now is the time to remind you that Power View is 2 generations behind Tableau, Qlikview and Spotfire. And there is no Desktop version of Power View; it is only available as a web application through a web browser.

Power View is a Silverlight application packaged by Microsoft as a SQL Server 2012 Reporting Services Add-in for Microsoft SharePoint Server 2010 Enterprise Edition. Power View is an (ad-hoc) report designer providing the user with an interactive data exploration, visualization, and presentation web experience. Microsoft stopped developing Silverlight in favor of HTML5, but Silverlight survived (another mistake) within the SQL Server team.

Previous report designers (still available from Microsoft: BIDS, Report Builder 1.0, Report Builder 3.0, Visual Studio Report Designer) are capable of producing only static reports, but Power View enables users to visually interact with data and drill down into all charts and Dashboards, similar to Tableau and Qlikview.

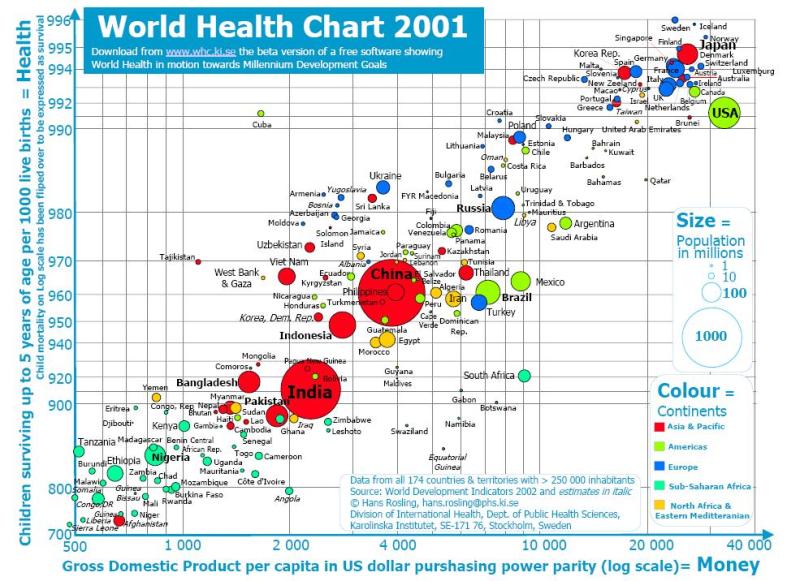

Power View is a Data Visualization tool, integrated with the Microsoft ecosystem. Here is a Demo of how the famous Hans Rosling Data Visualization can be reimplemented with Power View:

Compared with previous report builders from Microsoft, Power View offers many new features, like Multiple Views in a Single Report, Gallery preview of Chart Images, export to PowerPoint, Sorting within Charts by measures and Categories, Multiple Measures in Charts, Highlighting of selected data in reports and Charts, Synchronization of Slicers (Cross-Filtering), Measure Filters, Search in Filters (convenient for long lists of categories), dragging data fields into the Canvas (create table) or Charts (modify visualization), converting measures to categories (“Do Not Summarize”), and many other features.

As with any 1st release from Microsoft, you can find some bugs in Power View. For example, KPIs are not supported in Power View in SQL Server 2012, see it here: http://cathydumas.com/2012/04/03/using-or-not-using-tabular-kpis/

Power View is not Microsoft’s 1st attempt to be a full player in the Data Visualization and BI Market. Previous attempts failed and can be counted as Strikes.

Strike 1: The ProClarity acquisition in 2006 failed, there have been no new releases since v. 6.3; remnants of ProClarity can be found embedded into SharePoint, but there is no Desktop Product anymore.

Strike 2: Performance Point Server was introduced in November, 2007, and discontinued two years later. Remnants of Performance Point can be found embedded into SharePoint as Performance Point Services.

Both failed attempts were focused on the growing Data Visualization and BI space, specifically on fast-growing competitors such as Qliktech, Spotfire and Tableau. Their remnants in SharePoint are functionally far behind the Data Visualization leaders.

The path to Strike 3 started in 2010 with the release of PowerPivot (a very successful half-step, since it is just a backend for Visualization) and xVelocity (originally released under the name VertiPaq). Power View is a continuation of these efforts to add a front-end to the Microsoft BI stack. I do not expect that Power View will gain as much popularity as Qlikview and Tableau, and in my mind Microsoft will be subject to a 3rd strike in the Data Visualization space.

One reason I described at the very beginning of this post, and the 2nd reason is the absence of Power View on the desktop. It is a mystery to me why Microsoft did not implement Power View as a new part of Office (like Visio, which is a great success): as a new desktop application, or as a new Excel Add-In (like PowerPivot), or as new functionality in PowerPivot or even in Excel itself, or as a new version of their Report Builder. None of these options prevents having a Web reincarnation of it, and such a reincarnation could be done as a part of (native SSRS) Reporting Services. Why involve SharePoint (which is, and I said it many times on this blog, basically a virus)?

I am wondering what Donald Farmer thinks about Power View after being part of the Qliktech team for a while. From my point of view, Power View is a generous gift and a true relief to Data Visualization Vendors, because they do not need to compete with Microsoft for a few more years, or maybe forever. Now the IPO of Qliktech makes even more sense to me, and the upcoming IPO of Tableau makes much more sense to me too.

Yes, Power View means new business for consulting companies and Microsoft partners (because many client companies and their IT departments cannot handle it properly), and Power View has good functionality, but it will be counted in history as Strike 3.

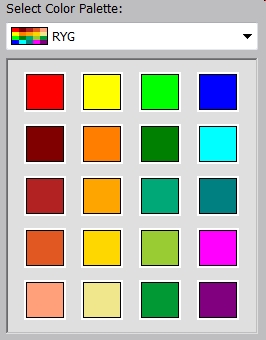

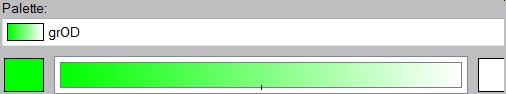

Palettes and Colors

(this is a repost from my Tableau blog: http://tableau7.wordpress.com/2012/04/02/palettes-and-colors/ )

I was always intrigued by colors and their usage, since my mom told me that maybe (just maybe, there is no direct proof of it anyway) the Ancient Greeks did not know what the color BLUE is; that puzzled me.

Later in my life, I realized that Colors and Palettes play a huge role in Data Visualization (DV), and it eventually led me to try to understand how they can be used and pre-configured in advanced DV tools to make Data more Visible and to express Data Patterns better. For this post I used Tableau to produce some palettes, but similar techniques can be found in Qlikview, Spotfire etc.

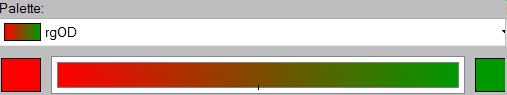

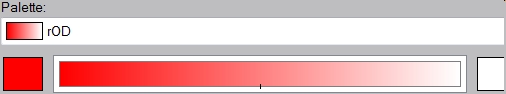

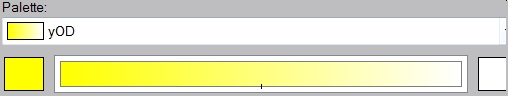

Tableau published a good article on how to create customized palettes here: http://kb.tableausoftware.com/articles/knowledgebase/creating-custom-color-palettes and I followed it below. As this article recommended, I modified the default Preferences.tps file; see it below with images of the respective Palettes embedded.

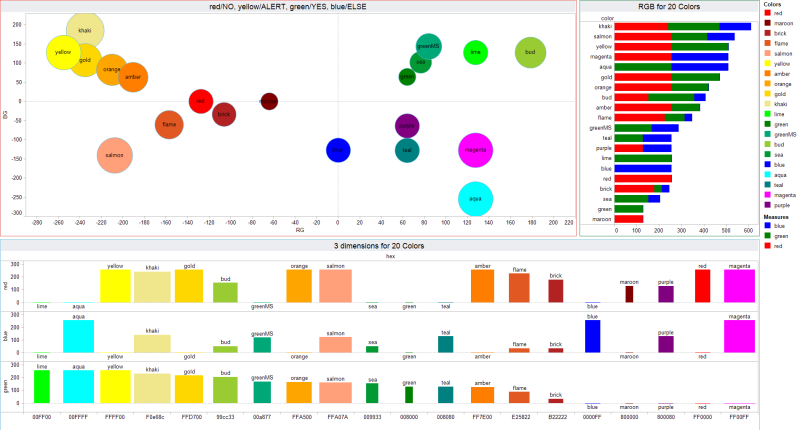

For the first, regular Red-Yellow-Green-Blue Palette, with known colors that have well-established names, I even created a Visualization in order to compare their Red-Green-Blue components, and I even tried to place the respective Bubbles on a 2-dimensional surface, even though originally it is clearly a 3-dimensional Dataset (click on image to see it in full size):

For the 2nd Red-Yellow-Green-NoBlue Ordered Sequential Palette, I tried to implement the extended “Set of Traffic Lights without any trace of BLUE Color” (so Homer and Socrates will understand it the same way as we do) while trying to use only web-safe colors. Please keep in mind that Tableau does not have a simple way to have more than 20 colors in one Palette, like Spotfire does.

The other 5 Palettes below are useful too, as ordered-diverging, almost “mono-chromatic” palettes (except Red-Green Diverging, since it can be used in Scorecards where Red is bad and Green is good). So see below the Preferences.tps file with my 7 custom palettes.
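The two constraints on that palette (web-safe colors only, and no blue component) are easy to check mechanically. Here is a minimal Python sketch, assuming the usual definition of web-safe colors (each RGB channel is one of 00, 33, 66, 99, CC, FF); the helper names are made up for this illustration, and the six colors are the ones listed for the RedYellowGreenNoBlueOrdered palette in the Preferences.tps file in this post.

```python
# Assumed definition: a color is "web-safe" if every channel is one of these six levels.
WEB_SAFE_LEVELS = {0x00, 0x33, 0x66, 0x99, 0xCC, 0xFF}


def hex_to_rgb(color):
    """Convert '#RRGGBB' to an (r, g, b) tuple of integers."""
    value = color.lstrip("#")
    if len(value) != 6:
        raise ValueError(f"expected #RRGGBB, got {color!r}")
    return tuple(int(value[i:i + 2], 16) for i in (0, 2, 4))


def is_web_safe(color):
    """True if all three channels are web-safe levels."""
    return all(channel in WEB_SAFE_LEVELS for channel in hex_to_rgb(color))


def has_blue(color):
    """True if the blue channel is non-zero."""
    return hex_to_rgb(color)[2] != 0


# Colors of the RedYellowGreenNoBlueOrdered palette from this post.
palette = ["#ff0000", "#cc6600", "#cccc00", "#ffff00", "#99cc00", "#009900"]

for color in palette:
    label = "web-safe" if is_web_safe(color) else "not web-safe"
    blue = "has blue" if has_blue(color) else "no blue"
    print(color, hex_to_rgb(color), label, blue)
```

The same `hex_to_rgb` helper gives the Red-Green-Blue components used to compare the colors of the first, regular palette.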

<?xml version='1.0'?> <workbook> <preferences>

<color-palette name="RegularRedYellowGreenBlue" type="regular">

<color>#FF0000</color> <color>#800000</color> <color>#B22222</color>

<color>#E25822</color> <color>#FFA07A</color> <color>#FFFF00</color>

<color>#FF7E00</color> <color>#FFA500</color> <color>#FFD700</color>

<color>#F0e68c</color> <color>#00FF00</color> <color>#008000</color>

<color>#00A877</color> <color>#99cc33</color> <color>#009933</color>

<color>#0000FF</color> <color>#00FFFF</color> <color>#008080</color>

<color>#FF00FF</color> <color>#800080</color>

</color-palette>

<color-palette name="RedYellowGreenNoBlueOrdered" type="ordered-sequential">

<color>#ff0000</color> <color>#cc6600</color> <color>#cccc00</color>

<color>#ffff00</color> <color>#99cc00</color> <color>#009900</color>

</color-palette>

<color-palette name="RedToGreen" type="ordered-diverging">

<color>#ff0000</color> <color>#009900</color> </color-palette>

<color-palette name="RedToWhite" type="ordered-diverging">

<color>#ff0000</color> <color>#ffffff</color> </color-palette>

<color-palette name="YellowToWhite" type="ordered-diverging">

<color>#ffff00</color> <color>#ffffff</color> </color-palette>

<color-palette name="GreenToWhite" type="ordered-diverging">

<color>#00ff00</color> <color>#ffffff</color> </color-palette>

<color-palette name="BlueToWhite" type="ordered-diverging">

<color>#0000ff</color> <color>#ffffff</color> </color-palette>

</preferences> </workbook>
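Since a hand-edited Preferences.tps file breaks easily (one missing closing tag or a "smart" quote is enough), a file like the one above can also be generated and checked with a short script. The following Python sketch is only an illustration and uses nothing but the standard library; the `build_preferences` helper and the 20-color limit are assumptions based on this post, not part of any Tableau API.

```python
import xml.etree.ElementTree as ET

MAX_COLORS = 20  # per-palette limit mentioned in this post; treated here as an assumption
VALID_TYPES = {"regular", "ordered-sequential", "ordered-diverging"}


def build_preferences(palettes):
    """Build a <workbook><preferences> tree from {name: (type, [colors])}."""
    workbook = ET.Element("workbook")
    preferences = ET.SubElement(workbook, "preferences")
    for name, (palette_type, colors) in palettes.items():
        if palette_type not in VALID_TYPES:
            raise ValueError(f"unknown palette type: {palette_type!r}")
        if len(colors) > MAX_COLORS:
            raise ValueError(f"palette {name!r} has more than {MAX_COLORS} colors")
        palette = ET.SubElement(
            preferences, "color-palette", {"name": name, "type": palette_type}
        )
        for color in colors:
            ET.SubElement(palette, "color").text = color
    return workbook


# Two of the palettes from this post, used as sample input.
palettes = {
    "RedToGreen": ("ordered-diverging", ["#ff0000", "#009900"]),
    "BlueToWhite": ("ordered-diverging", ["#0000ff", "#ffffff"]),
}

xml_text = "<?xml version='1.0'?>\n" + ET.tostring(
    build_preferences(palettes), encoding="unicode"
)
print(xml_text)

# Parsing the result back confirms that the generated text is well-formed XML.
parsed = ET.fromstring(xml_text)
print(len(parsed.findall("./preferences/color-palette")), "palettes")
```

The printed text can be saved as Preferences.tps; running an existing file through `ET.parse` in the same way is a quick check for unclosed tags before restarting Tableau.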

If you wish to use colors you like, this site is very useful for exploring the properties of different colors: http://www.perbang.dk/rgb/

Free Tableau Reader enables Server-less Visualization!

(this is a repost from http://tableau7.wordpress.com/2012/03/31/tableau-reader/ )